Keynote Presentation – DFMA for Discontinuous Improvement and Innovation

Tuesday, June 15th, 2010

9:30 a.m. to 10:30 a.m.

Keynote Presentation

2010 INTERNATIONAL FORUM on Design for Manufacturing and Assembly

Providence, Rhode Island, USA

DFMA for Discontinuous Improvement and Innovation

Abstract

The literature is full of examples of companies using DFMA to design lower cost products. Though the savings are radical in magnitude, there is a general misconception that DFMA is most like continuous improvement work with regular installments of small improvements. This thinking does DFMA an injustice, as the DFMA process drives creative solutions, radical changes, discontinuous improvement, and innovation. The paper describes how to use DFMA to define areas for discontinuous improvement and innovation, how to create a design approach, and how to define and execute a project plan to achieve radical improvement.

Presenter

Dr. Mike Shipulski

For the past six years as Director of Engineering at Hypertherm, Inc., Mike has had the responsibilities of product development, technology development, sustaining engineering, engineering talent development, engineering labs, and intellectual property. Before Hypertherm, Mike worked in a manufacturing start‐up as the Director of Manufacturing and at General Electric’s R&D center as a Manufacturing Scientist during the start‐up phase of GE’s Six Sigma efforts. Mike received a Ph.D. in Manufacturing Engineering from Worcester Polytechnic Institute. Mike is the winner of the 2006 DFMA Supporter of the Year, and has been a keynote presenter at the DFMA Forum since 2006.

Pareto’s Three Lenses for Product Design

Axiom 1 – Time is short, so make sure you’re working on the most important stuff.

Axiom 2 – You can’t design out what you can’t see.

In product development, these two axioms can keep you out of trouble. They’re two sides of the same coin, but I’ll describe them one at a time and hope it comes together in the end.

With Axiom 1, how do you make sure you’re working on the most important stuff? We all know it’s function first – no learning there. But, sorry design engineers, it doesn’t end with function. You must also design for lean, for cost, and factory floor space. Great. More things to design for. Didn’t you say time was short? How the hell am I going to design for all that?

Now onto the seeing business of Axiom 2. If we agree that lean, cost, and factory floor space are the right stuff, we must “see it” if we are to design it out. See lean? See cost? See factory floor space? You’re nuts. How do you expect us to do that?

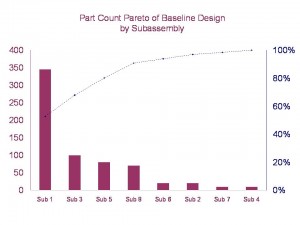

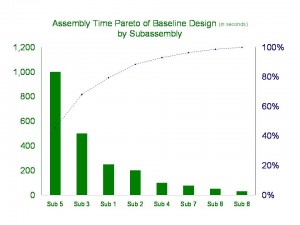

Pareto to the rescue – use Pareto charts to identify the most important stuff, to prioritize the work. With Pareto, it’s simple: work on the biggest bars at the expense of the smaller ones. But, Paretos of what?

There is no such thing as a clean sheet design – all new product designs have a lineage. A new design is based on an existing design, a baseline design, with improvements made in several areas to realize more features or better function defined by the product specification. The Pareto charts are created from the baseline design to allow you to see the things to design out (Axiom 2). But what lenses to use to see lean, cost, and factory floor space?

Here are Pareto’s three lenses to see what must be seen:

To lean out your factory, design out the parts. Parts create waste and part count is the surrogate for lean.

To design out cost, measure cost. Cost is the surrogate for cost.

To design out factory floor space, measure assembly time. Since factory floor space scales with assembly time, assembly time is the surrogate for factory floor space.

Now that your design engineers have created the right Pareto charts and can see with the right glasses, they’re ready to focus their efforts on the most important stuff. No boiling the ocean here. For lean, focus on part count of subassembly 1; for cost, focus on the cost of subassemblies 2 and 4; for floor space, focus on assembly time of subassembly 5. Leave the others alone.
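As a rough illustration of the three lenses, here is a minimal Python sketch that ranks the subassemblies of a baseline design by part count, cost, and assembly time. The `Subassembly` class, the `pareto` helper, and every number in `baseline` are hypothetical; the numbers were picked only so the biggest bars match the example above.

```python
from dataclasses import dataclass


@dataclass
class Subassembly:
    name: str
    part_count: int         # surrogate for lean
    cost: float             # surrogate for cost
    assembly_time_s: float  # surrogate for factory floor space


def pareto(subassemblies, metric):
    """Return (name, value, cumulative share) rows, biggest bar first."""
    ranked = sorted(subassemblies, key=lambda s: getattr(s, metric), reverse=True)
    total = sum(getattr(s, metric) for s in ranked)
    rows = []
    running = 0.0
    for s in ranked:
        value = getattr(s, metric)
        running += value
        rows.append((s.name, value, running / total))
    return rows


# Hypothetical baseline design data, for illustration only.
baseline = [
    Subassembly("subassembly 1", 120, 40.0, 300.0),
    Subassembly("subassembly 2", 30, 210.0, 200.0),
    Subassembly("subassembly 3", 20, 35.0, 150.0),
    Subassembly("subassembly 4", 25, 180.0, 250.0),
    Subassembly("subassembly 5", 15, 35.0, 900.0),
]

for metric in ("part_count", "cost", "assembly_time_s"):
    print(metric)
    for name, value, cumulative in pareto(baseline, metric):
        print(f"  {name:15s} {value:8.1f}  cumulative {cumulative:6.1%}")
```

The top one or two rows of each list are the bars to work on; the rest can wait.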

Focus is important and difficult, but Pareto can help you see the light.

Workshop on Systematic DFMA Deployment

Monday, June 14th, 2010

1:00 p.m. to 5:00 p.m.

Pre‐Conference Workshop

2010 INTERNATIONAL FORUM on Design for Manufacturing and Assembly

Providence, Rhode Island, USA

Systematic DFMA Deployment

Real‐World Implementation and Hard Savings Make the Difference

Systematic DFMA Deployment is a straightforward, logical method to design out product cost and design in product function. Whether you want to learn about DFMA, execute a single project, implement across the company, or convince company leaders of DFMA benefits, this workshop is for you.

Systematic DFMA Deployment workshop attendees will learn how to:

- Select and manage projects

- Define resources and quantify savings

- Communicate benefits to company leadership

- Coordinate with lean and Six Sigma

- Get more out of DFMA software

Once you understand the principles of the Systematic DFMA Deployment milestone‐based system, you will focus on activities that actually reduce product cost and avoid wheel‐spinning activities that create distraction.

Workshop Fee: $95

Presenter

Dr. Mike Shipulski

For the past six years as Director of Engineering at Hypertherm, Inc., Mike has had the responsibilities of product development, technology development, sustaining engineering, engineering talent development, engineering labs, and intellectual property. Before Hypertherm, Mike worked in a manufacturing start‐up as the Director of Manufacturing and at General Electric’s R&D center as a Manufacturing Scientist during the start‐up phase of GE’s Six Sigma efforts. Mike received a Ph.D. in Manufacturing Engineering from Worcester Polytechnic Institute. Mike is the winner of the 2006 DFMA Supporter of the Year, and has been a keynote presenter at the DFMA Forum since 2006.

Blind To Your Own Assumptions

Whether inventing new technologies, designing new products, or solving manufacturing problems, it’s important to understand assumptions. Assumptions shape the technical approach and focus thinking on what is considered (assumed) most important. Blindness to assumptions is all around us and is a real reason for concern. And the kicker, the most dangerous ones are also the most difficult to see – your own assumptions. What techniques or processes can we use to ferret out our own implicit assumptions?

By definition, implicit assumptions are made without formalization; they’re not explicit. Unknowingly, fertile design space can be walled off. Like the archeologist digging on the wrong side of the pyramid, dig all he wants, he won’t find the treasure because it isn’t there. Also with implicit assumptions, precious time and energy can be wasted solving the wrong problem. Like the auto mechanic who replaced the wrong part, the real problem remains. Both scenarios can create severe consequences for a product development project. (NOTE: the notion of implicit assumptions is closely related to the notion of intellectual inertia. See Categories – Intellectual Inertia for a detailed treatment.)

Now the tough part. How to identify your own implicit assumptions? When at their best, the halves of our brains play nicely together, but never does either side rise to the level of omnipotence. It’s impossible to stand outside ourselves and watch us make implicit assumptions. We don’t work that way. We need some techniques.

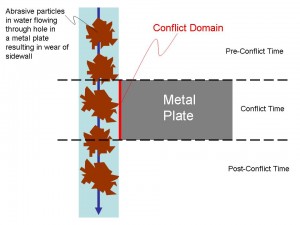

Narrow, narrow, narrow. The probability of making implicit assumptions decreases when the conflict domain is narrowed.

Narrow in space and time to make the conflict domain small and assumptions are reduced.

Narrow the conflict domain in space – narrow to two elements of the design that aren’t getting along. Not three elements – that’s one too many, but two. Narrow further and make sure the two conflicting elements are in direct physical contact, with nothing in between. Narrow further and define where they touch. Get small, really small, so small the direct contact is all you see. Narrowing in space reduces space-based assumptions.

Narrow the conflict domain in time – break it into three time domains: pre-conflict time, conflict time, post-conflict time. This is a foreign idea, but a powerful one. Solutions are different in the three time domains. The conflict can be prevented before it happens in the pre-conflict time, conflict can be dealt with while it’s happening (usually a short time) in the conflict time, and ramifications of the conflict can be cleaned up in the post-conflict time. Narrowing in time reduces time-based assumptions.

Assumptions narrow even further when the conflict domain is narrowed in time and space together, limiting them to the where and when of the conflict. Like the intersection of two overlapping circles, the conflict domain is sharply narrowed at the intersection of space and time – a small space over a short time.

It’s best to create a picture of the conflict domain to understand it in space and time. The picture shows an example where abrasives (brown) in a stream of water (light blue) flow through a hole in a metal plate (gray) creating wear of the sidewall (in red). Pre-conflict time is before the abrasive particles enter the hole from the top; conflict time is while the particles contact the sidewall (conflict domain in red); post-conflict time is after the particles leave the hole. Only the right side of the metal plate is shown to focus on the conflict domain. There is no conflict where the abrasive particles do not touch the sidewall.

Even if your radar is up and running, assumptions are tough to see – they’re translucent at best. But the techniques can help, though they’re difficult and uncomfortable, especially at first. But that’s the point. The techniques force you to argue with yourself over what you know and what you think you know. For a good start, try to identify the two elements of the design that are not getting along, and make sure they’re in direct physical contact. If you’re looking for more of a challenge, try to draw a picture of the conflict domain. Your assumptions don’t stand a chance.

With Innovation, It’s Trust But Verify.

Your best engineer walks into your office and says, “I have this idea for a new technology that could revolutionize our industry and create new markets, markets three times the size of our existing ones.” What do you do? What if, instead, it’s a lower caliber engineer that walks into your office and says those same words? Would you do anything differently? I argue you would, even though you had not heard the details in either instance. I think you’d take your best engineer at her word and let her run with it. And, I think you’d put less stock in your lesser engineer, and throw some roadblocks in the way, even though he used the same words. Why? Trust.

Innovation is largely a trust-based sport. We roll the dice on folks that have already put it on the table, and, conversely, we raise the bar on those that have not yet delivered – they have not yet earned our trust. Seems rational and reasonable – trust those who have earned it. But how did they earn your trust the first time, before they delivered? Trust.

There is no place for trust in the sport of innovation. It’s unhealthy. Ronald Reagan had it right:

Trust, but verify.

As we know, he really meant there was no place for trust in his kind of sport. Every action, every statement had to be verified. The consequences so cataclysmic, no risk could be tolerated. With innovation consequences are not as severe, but they are still substantial. A three year, multi-million (billion?) dollar innovation project that returns nothing is substantial. Why do we tolerate the risk that comes with our trust-based approach? I think it’s because we don’t think there’s a better way. But there is. What we need is some good, old-fashioned verification mixed in with our innovation.

When the engineer comes into your office and says she can reinvent your industry, what do you ask yourself? What do you want to verify? You want to know if the new idea is worth a damn, if it will work, if there are fundamental constraints in the way. But, unfortunately for you, verification requires knowledge of the physics, and you’re no physicist. However, don’t lose hope. There are two simple tactics, non-technical tactics, to help with this verification business.

First – ask the engineers a simple question, “What conflict is eliminated with the new technology?” Good, innovative technologies eliminate fundamental, long standing conflicts. These long standing conflicts limit a technology in a way that is so fundamental engineers don’t even know they exist. When a fundamental conflict is eliminated, long held “design tradeoffs” no longer apply, and optimizing is replaced by maximizing. With optimizing, one aspect of the design is improved at the expense of another. With maximizing, both aspects of the design are improved without compromise. If the engineers cannot tell you about the conflict they’ve eliminated, your trust has not been sufficiently verified. Ask them to come back when they can answer your question.

Second – when they come back with their answer, it will be too complex to be understood, even by them. Tell them to come back when they can describe the conflict on a single page using a simple block diagram, where the blocks, labeled with everyday nouns, represent parts of the design intimately involved with the conflict, and the lines, labeled with everyday verbs, represent actions intimately involved with the conflict. If they can create a block diagram of the conflict, and it makes sense to you, your trust has been sufficiently verified. (For a post with a more detailed description of the block diagrams, click on “one page thinking” in the Category list.)

Though your engineers won’t like it at first, your two-pronged verification tactics will help them raise their game, which, in turn, will improve the risk/reward ratio of your innovation work.

Tools for innovation and breaking intellectual inertia

Everyone wants growth – but how? We know innovation is a key to growth, but how do we do it? Be creative, break the rules, think out of the box, think real hard, innovate. Those words don’t help me. What do I do differently after hearing them?

I am a process person; processes help me. Why not use a process to improve innovation? Try this: set up a meeting with your best innovators and use “process” and “innovation” in the same sentence. They’ll laugh you off as someone that doesn’t know the front of a cat from the back. Take your time to regroup after their snide comments and go back to your innovators. This time tell them how manufacturing has greatly improved productivity and quality using formalized processes. List them – lean, Six Sigma, DFSS, and DFMA. I’m sure they’ll recognize some of the letters. Now tell them you think a formalized process can improve innovation productivity and quality. After the vapor lock and brain cramp subside, tell them there is a proven process for improved innovation.

A process for innovation? Is this guy for real? Innovation cannot be taught or represented by a process. Innovation requires individuality of thinking. It’s a given right of innovators to approach it as they wish, kind of like freedom of speech where any encroachment on freedom is a slippery slope to censorship and stifled thinking. A process restricts, it standardizes, it squeezes out creativity and reduces individual self worth. People are either born with the capability to innovate, or they are not. While I agree that some are better than others at creating new ideas, innovation does not have to be governed by hunch, experience and trial and error. Innovation does not have to be like buying lottery tickets. I have personal experience using a good process to help stack the odds in my favor and help me do better innovation. One important function of the innovation process is to break intellectual inertia.

Intellectual inertia must be overcome if real, meaningful innovation is to come about. When intellectual inertia reigns, yesterday’s thinking carries the day. Yesterday’s thinking has the momentum of a steam train puffing and bellowing down the tracks. This old train of thought can only follow a single path – the worn tracks of yesteryear, and few things are powerful enough to derail it. To misquote Einstein:

The thinking that got us into this mess is not the thinking that gets us out of it.

The notion of intellectual inertia is the opposite of Einstein’s thinking. The intellectual inertia mantra: the thinking that worked before is the thinking that will work again. But how to break the inertia?

Reducing the risk of Innovation

Though we can’t describe it in words, or tell someone how to do it, we all know innovation is good. Why is it good? Look at the causal chain of actions that create a good economy, and you’ll find innovation is the first link.

When innovation happens, a new product is created that does something that no other product has done before. It provides a new function, it has a new attribute that is pleasing to the eye, it makes a customer more money, or it simply makes a customer happy. It does not matter which itch it scratches, the important part is the customer finds it valuable, and is willing to pay hard currency for it. Innovation does something amazing, it results in a product that creates value; it creates something that’s worth more than the sum of its parts. Starting with things dug from the ground or picked from it – dirt (steel, aluminum, titanium), rocks (minerals/cement/ceramics), and sticks (wood, cotton, wool), and adding new thinking, a product is created, a product that customers pay money for, money that is greater than the cost of the dirt, rocks, sticks, and new thinking. This, my friends, is value creation, and this is what makes national economies grow sustainably. Here’s how it goes.

Customers value the new product highly, so much so that they buy boatloads of them. The company makes money, so much so that its stock price quadruples. With its newly-stuffed war chest, the company invests with confidence, doing more innovation, selling more products, and making more money. An important magazine writes about the company’s success, which causes more companies to innovate, sell, and invest. Before you know it, the economy is flooded with money, and we’re off to the races in a sustainable way – a way based on creating value. I know this sounds too simplistic. We’ve listened too long to the economists and their theories – spur demand, markets are efficient, and the world economy thing. This crap is worse than it sounds. Things don’t have to be so complicated. I wish economists weren’t so able to confuse themselves. Innovate, sell, and invest, that’s the ticket for me.

Innovation – straightforward, no, easy, no. Innovation is scary as hell because it’s risky as hell. The risk? A company tries to develop a highly innovative product, nothing comes out of the innovation tailpipe, and the company has nothing for its investment. (I can never keep the finance stuff straight. Does zero return on a huge investment increase or decrease stock price?) It’s the tricky risk thing that gets in the way of innovation. If innovation were risk free, we’d all be doing it like voting in Chicago – early and often. But it’s not. There is, however, a way to shift the risk/reward ratio in our favor.

After doing innovation wrong, learning, and doing it less wrong, I have found one thing that significantly and universally reduces the risk/reward ratio. What is it?

Know you’re working on the right problem.

Work on the right problem? Are you kidding? This is the magic advice? This is the best you’ve got? Yes.

If you think it’s easy to know you’re working on the right problem, you’ve never truly known you were working on the right problem, because this type of knowing is big medicine. Innovation is all about solving a special type of problem, problems caused by fundamental conflicts and contradictions, things that others don’t know exist, don’t know how to describe, or define, let alone know how to eliminate. I’m talking about conflicts and contradictions in the physics sense – where something must be hot and cold at the same time, something must be big while being small, black while white, hard one instant, and soft the next. Solve one of those babies, and you’ve innovated yourself a blockbuster product.

In order to know you’re working on the right problem (conflict or contradiction), the product is analyzed in the physics sense. What’s happening, why, where, when, how? It’s the rule (not the exception) that no one knows what’s really going on, they only think they do. Since the physics are unknown, a hypothesis of the physics behind the conflict/contradiction must be conjured and tested. The hypothesis must be tested analytically or in the lab. All this is done to define the problem, not solve it. To conjure correctly, a radical and seemingly inefficient activity must be undertaken. Engineers must sit at their desks and think about physics. This type of thinking is difficult enough on its own and almost impossible when project managers are screaming at them to get off their butts and fix the problem. As we know, thinking is not considered progress, only activity is.

After conjuring the hypothesis, it’s tested to prove or disprove it. If disproven, back to the desk for more thinking. If proven, the conflict/contradiction behind the problem is defined, and you know you’re working on the right problem. You have not solved it, you’ve only convinced yourself you’re working on the right one. Now the problem can be solved.

Believe it or not, solving is the easy part. It’s easy because the physics of the problem are now known and have been verified in the lab. We engineers can solve physics problems once they’re defined because we know the rules. If we don’t know the physics rules off the top of our heads, our friends do. And for those tricky times, we can go to the internet and ask Google.

I know all this sounds strange. That’s okay, it is. But it’s also true. Give your engineers the tools, time and training to identify the problems, conflicts, and contradictions and innovation will follow. Remember the engineering paradox, sometimes slower is faster. And what about those tools for innovation? I’ll save them for another time.

Looking for the next evolution of lean? Look back.

Many have achieved great success with lean – it’s all over the web. Companies have done 5S, standard work, value stream mapping, and flow-pull-perfection. Waste in value streams has been reduced from 95% to 80%, which is magical; productivity gains have been excellent; and costs have dropped dramatically. But the question on everyone’s mind – what’s next? The blogs, articles, and papers are speculating on the question and proposing theories, all of which have merit. But I think we’re asking the wrong question.

Instead of looking forward for the next evolution of lean, we should look back. We must take a fundamental, base-level look at our factories, and ask what did we miss? We must de-evolve our thinking about our factories, and break down their DNA – like mapping the factory genome.

Though lean has achieved radical success, it has not achieved fundamental reduction in factory complexity. Heresy? Let me explain. Lean helped us migrate from batch building to single piece flow. With batch building, a group of parts are processed at machine A, then, when all are finished, the whole family moves to machine B. With single piece flow, a part is processed at machine A then she moves, without her sisters, directly to machine B, resulting in big savings. But in both cases, the fundamental part flow, a surrogate for factory complexity, remains unchanged – parts move from machine A to machine B. Lean did not change it. Lean has taken the bends out of our factory flow and squeezed machines together, but that’s continuous improvement. We’ve got good signals, we’ve got cell-based metrics, and 15 minute pitches. Again, continuous improvement. But what about discontinuous improvement? How can we fundamentally reduce factory complexity?

Factories are what they are because of the parts flowing through them.

Factory flow and complexity are governed by the genetics of the parts. In that way, parts are the building blocks of the factory genome. From the machines and tools to the people, handling equipment, and the incoming power – they’re all shaped by the parts’ genetics. Heavy parts, heavy duty cranes; complex parts, complex flows; big parts, big factories. When we want to make a fundamental change in bacteria to make a vaccine, we change the genetics. When we want to make a fruit immune to a natural enemy or resistant to cold of an unnatural habitat, we change the genetics. So, it follows, if fundamental change in factory complexity is the objective, the factory genome should change.

Don’t try to simplify the factory directly, change the parts to let the factory simplify itself.

Discontinuous reduction of factory complexity is the result of something – changing the products that flow through the factory. Only design engineers can do that. Only design engineers can eliminate features on the design so machine B is not required. Only design engineers can redesign the product to eliminate the part altogether – no more need for machine A or B. In both cases, the design engineer did what lean could not.

Lean is a powerful tool, and I’m an advocate. But we missed an important part of the lean family. We drove right by. We had the chance to engage the design community in lean, but we did not. Let’s get in the car, drive back to the design community, and pick them up. We’ll tell them anything they want to hear, just as long as they get in the car. Then, as fast as we can, we’ll drive them to the lean pool party. Because as Darwin knew, diversity is powerful, powerful enough to mutate lean into a strain that can help us survive in the future.

Engineers and Change?

As an engineering leader I work with design engineers every day. I like working with them, it’s fun. It’s comfortable for me because I understand us. Yes, I am an engineer.

I know what we’re good at, and I know what we’re not good at. I’ve heard the jokes. Some funny, some not. But when engineers and non-engineers work well together, there’s lots of money to be made. I figure it’s time to explain how engineers tick so we can make more money. An engineer explaining engineers, to non-engineers – a flawed premise? Maybe, but I’ll roll the dice.

Everyone knows why design engineers are great to have around. Want a new product? Put some design engineers on it. Want to solve a tough technical problem? Put some design engineers on it. Want to create something from nothing? Design engineers. Everyone also knows we can be difficult to work with. (I know I can be.) How can we be high performing in some contexts and low performing in others? What causes the flip between modes? Understanding what’s behind this dichotomy is the key to understanding engineers. What’s behind this? In a word, “change”. And if you understand change from an engineer’s perspective, you understand engineers. If you remember just one sentence, here it is:

To engineers, change equals risk, and risk is bad.

Why do we think that way? Because that’s who we are; we’re walking risk reduction machines. And that’s good because in this time of doing more, doing it with less, and doing it faster, companies are taking more risk. Engineers make sure risk is always part of the risk-reward equation.

The best way to explain how engineers think about change and risk is to give examples. Here are three examples.

Changing a drawing for manufacturing

Several months after product launch, with things running well, there is a request to change an engineering print. Change the print? That print is my recipe. I know how it works and when it doesn’t. That recipe works. My job is to make sure it works, and someone wants to change it? I’m not sure it will work. Did I tell you it’s my job to make sure it works? I don’t have time to test it thoroughly. Remember, when I say it will work, you expect that it will. I’m not sure the change will work. I don’t want to take the risk. Change is risk.

Changing the specification

This is a big one. Three months into a new product development project, the performance specification is changed, moving it north into unknown territory. The customer will benefit from the increased performance, we understand this, but the change created risk. The knowledge we created over the last three months may not be relevant, and we may have to recreate it. We want to meet the new specification (we’re passionate about product and technology), but we don’t know if we can. You count on us to be sure that things will work, and we pride ourselves on our ability to do that for you. But with the recent specification change, we’re not sure we can get it done. That’s risk, that’s uncomfortable for us, and that’s the reason we respond as we do to specification changes. Change is risk.

Changing how we do product development

This is the big one. We have our ways of doing things and we like them. Our design processes are linear, rational, and make sense (to us). We know what we can deliver when we follow our processes; we know about how long it will take; and we know the product will work when we’re done. Low risk. Why do you want us to change how we do things? Why do you want to add risk to our processes? All we’re trying to do is deliver a great product for you. Change is risk.

Engineers have a natural bias toward risk reduction. I am not rationalizing or criticizing, just explaining. We don’t expect zero risk; we know it’s about risk optimization and not risk minimization. But it’s important to keep your eye on us to make sure our risk pendulum does not swing too far toward minimization. The great American philosopher Mae West said, “Too much of a good thing can be wonderful.” But that’s not the case here.

When it comes to engineers and risk reduction, too much of a good thing is not wonderful.

Fasteners Can Consume 20-50% of Assembly Labor

The data-driven people in our lives tell us that you can’t improve what you can’t measure. I believe that. And it’s no different with product cost. Before improving product cost, before designing it out, you have to know where it is. However, it can be difficult to know what really creates cost. Not all parts and features are created equal; some create more cost than others, and it’s often unclear which are the heavy hitters. Sometimes the heavy hitters don’t look heavy, and often are buried deeply within the hidden factory.

Measure, measure, measure. That’s what the black belts say. However, it’s difficult to do well with product cost since our costing methods are hosed up and our measurement systems are limited. What do I mean? Consider fasteners (e.g., nuts, bolts, screws, and washers), the product’s most basic life form. Because fasteners are not on the BOM, they’re not part of product cost. Here’s the party line: it’s overhead to be shared evenly across all the products in a socialist way. That’s not a big deal, right? Wrong. Although fasteners don’t cost much in ones and twos, they do add up. 300-500 pieces per unit times the number of units per year makes for a lot of unallocated and untracked cost. However, a more significant issue with those little buggers is they take a lot of time to attach to the product. For example, using standard time data from DFMA software, assembly of a 1/4″ nut with a bolt, Loctite, a lockwasher, and cleanup takes 50 seconds. That’s a lot of time. You should be asking yourself what that translates to in your product. To figure it out, multiply the number of nut/bolt/washer groupings by 50 seconds and multiply the result by the number of units per year. Actually, never mind. You can’t do the calculation because you don’t know the number of nut/bolt/washer combinations that are in your product. You could try to query your BOMs, but the information is likely not there. Remember, fasteners are overhead and not allocated to product. Have you ever tried to do a cost reduction project on overhead? It’s impossible. Because overhead inflicts pain evenly on all, no one is responsible for reducing it.
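If you do manage to count the groupings (say, by building a unit on the floor and tallying them), the arithmetic itself is simple. Here is a minimal sketch in Python. The 50 seconds is the DFMA standard time quoted above; the grouping count and annual volume are made-up placeholders, so plug in your own numbers.

```python
# Standard time for one nut/bolt/washer grouping, from the DFMA figure quoted in the text.
SECONDS_PER_GROUPING = 50


def fastener_labor_hours(groupings_per_unit, units_per_year,
                         seconds_per_grouping=SECONDS_PER_GROUPING):
    """Annual labor hours spent attaching fastener groupings."""
    total_seconds = groupings_per_unit * seconds_per_grouping * units_per_year
    return total_seconds / 3600


# Hypothetical product: 100 groupings per unit, 10,000 units per year.
hours = fastener_labor_hours(groupings_per_unit=100, units_per_year=10_000)
print(f"{hours:,.0f} labor hours per year")  # prints "13,889 labor hours per year"
```

With those placeholder numbers, that is nearly 14,000 hours a year spent attaching fasteners, none of it visible in product cost.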

With fasteners, it’s like death by a thousand cuts.

The time to attach them can be as much as 20-50% of labor. That’s right, up to 50%. That’s like paying 20-50% of your folks to attach fasteners all day. That should make you sick. But it’s actually worse than that. From Line Design 101, the number of assembly stations is proportional to demand times labor time. Since fasteners inflate labor time, they also inflate the number of assembly stations, which, in turn, inflates the factory floor space needed to meet demand. Would you rather design out fasteners or add 15% to your floor space? I know you can get good deals on factory floor space due to the recession, but I’d still rather design out fasteners.
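To see how labor time drives station count, here is a rough Python sketch of the Line Design 101 relationship. It assumes stations equal demand times labor time divided by the time one station has available per year, rounded up. The volume, labor times, and 2,000 available hours per station are all hypothetical numbers chosen for illustration, not data from a real line.

```python
import math


def stations_needed(units_per_year, labor_seconds_per_unit,
                    available_seconds_per_station):
    """Stations required = demand x labor time / time available per station, rounded up."""
    return math.ceil(units_per_year * labor_seconds_per_unit
                     / available_seconds_per_station)


# Hypothetical line: 10,000 units per year, 2,000 working hours per station per year.
UNITS_PER_YEAR = 10_000
AVAILABLE_SECONDS = 2_000 * 3600

# Hypothetical product: 3 hours of assembly labor, 30% of it spent on fasteners.
LABOR_SECONDS = 10_800
FASTENER_SECONDS = 3_240

with_fasteners = stations_needed(UNITS_PER_YEAR, LABOR_SECONDS, AVAILABLE_SECONDS)
without_fasteners = stations_needed(UNITS_PER_YEAR, LABOR_SECONDS - FASTENER_SECONDS,
                                    AVAILABLE_SECONDS)

print(with_fasteners, without_fasteners)  # prints "15 11"
```

In this made-up case, designing out the fasteners frees four of fifteen stations and the floor space that goes with them.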

Even with the amount of assembly labor consumed by fasteners, our thinking and computer systems are blind to them and the associated follow-on costs. And because of our vision problems, the design community cannot be held accountable to design out those costs. We’ve given them the opportunity to play dumb and say things like, “Those fastener things are free. I’m not going to spend time worrying about that. It’s not part of the product cost.” Clearly not an enlightened statement, but it’s difficult to overcome without cost allocation data for the fasteners.

The work-around for our ailing thinking and computer-based cost tracking systems is simple: get the design engineers out to the production floor to build the product. Have them experience firsthand how much waste is in the product. They’ll come back with a deep-in-the-gut understanding of how things really are. Then, have them use DFMA software to score the existing design, part-by-part, feature-by-feature. I guarantee everyone will know where the cost is after that. And once they know where the cost is, it will be easy for them to design it out.

I have data to support my assertion that fasteners can make up 20-50% of labor time, but don’t take my word for it. Go out to the factory floor, shut your eyes and listen. You’ll likely hear the never-ending song of the nut runners. With each chirp, another nut is fastened to its bolt and washer, and another small bit of labor and factory floor space is consumed by the lowly fastener.

DFA and Lean – A Most Powerful One-Two Punch

Lean is all about parts. Don’t think so? What do your manufacturing processes make? Parts. What do your suppliers ship you? Parts. What do you put into inventory? Parts. What do your shelves hold? Parts. What is your supply chain all about? Parts.

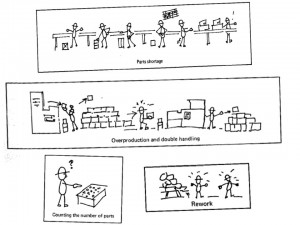

Still not convinced parts are the key? Take a look at the seven wastes and add “of parts” to the end of each one. Here is what it looks like:

- Waste of overproduction (of parts)

- Waste of time on hand – waiting (for parts)

- Waste in transportation (of parts)

- Waste of processing itself (of parts)

- Waste of stock on hand – inventory (of parts)

- Waste of movement (from parts)

- Waste of making defective products (made of parts)

And look at Suzaki’s cartoons. What do you see? Parts.

Take out the parts and the waste is not reduced, it’s eliminated. Let’s do a thought experiment, and pretend your product had 50% fewer parts. (I know it’s a stretch.) What would your factory look like? How about your supply chain? There would be fewer parts to ship, fewer to receive, fewer to move, fewer to store, fewer to handle, fewer opportunities to wait for late parts, and fewer opportunities for incorrect assembly. Loosen your thinking a bit more, and the benefits broaden: fewer suppliers, fewer supplier qualifications, fewer late payments, fewer supplier quality issues, and fewer expensive black belt projects. Most important, however, may be the reduction in transactions, e.g., work in process tracking, labor reporting, material cost tracking, inventory control and valuation, BOMs, routings, backflushing, work orders, and engineering changes.

However, there is a big problem with the thought experiment: there is no one to design out the parts. Since company leadership does not thrust greatness on the design community, design engineers do not have to participate in lean. No one makes them do DFA-driven part count reduction to complement lean. Don’t think you need the design community? Ask your best manufacturing engineer to write an engineering change to eliminate parts, and see where it goes: nowhere. No design engineer, no design change. No design change, no part elimination.

It’s staggering to think of the savings that would be achieved with the powerful pairing of DFA and lean. It would go like this: The design community would create a low waste design on which the lean community would squeeze out the remaining waste. It’s like the thought experiment; a new product with 50% fewer parts is given to the lean folks, and they lean out the low waste value stream from there. DFA and lean make such a powerful one-two punch because they hit both sides of the waste equation.

DFA eliminates parts, and lean reduces waste from the ones that remain.

There are no technical reasons that prevent DFA and lean from being done together, but there are real failure modes that get in the way. The failure modes are emotional, organizational, and cultural in nature, and are all about people. For example, shared responsibility for design and manufacturing typically resides in the organizational stratosphere – above the VP or Senior VP levels. And because of the failure modes’ nature (organizational, cultural), the countermeasures are largely company-specific.

What’s in the way of your company making the DFA/lean thought experiment a reality?

Mike Shipulski
