Archive for the ‘Product Robustness’ Category

How I Develop Engineering Leaders

For the past two decades, I’ve actively developed engineering leaders. A good friend asked me how I do it, so I took some time to write it down. Here is the curriculum in the form of How Tos:

How to build trust. This is the first thing. Always. Done right, the trust-based informal networks are stronger than the formal organization chart. Done right, the informal networks can protect the company from bad decisions. Done right, the right information flows among the right engineers at the right time so the right work happens in the right way.

How to decide what to do next. This is a broad one. We start with a series of questions: What are we doing now? What’s the problem? How do you know? What should we do more of? What should we do less of? What resources are available? When must we be done?

How to map the current state. We don’t define the idealized future state or the North Star, we start with what’s happening now. We make one-page maps of the territory. We use drawings, flow charts, boxes/arrows, and the fewest words. And we take no action before there’s agreement on how things are. The value of GPS isn’t to define your destination, it’s to establish your location. That’s why we map the current state.

How to build momentum. It’s easy to jump onto a moving steam train, but a stationary one is difficult to get moving. We define the active projects and ask – How might we hitch our wagon to a fast-moving train?

How to start something new. We start small and make a thought-provoking demo. The prototype forces us to think through all the elements, makes things real, and helps others understand the concept. If that doesn’t work, we start smaller.

How to define problems so we can solve them easily. We define problems with blocks and arrows, and limit ourselves to one page. The problem is defined as a region of contact between two things, and we identify it with the color red. That helps us know where the problem is and when it occurs. If there are two problems on a page, we break it up into two pages with one problem. Then we decide to solve the problem before, during, or after it occurs.

How to design products that work better and cost less. We create Pareto charts of the cost of the existing product (cost by subassembly and cost by part) and set a cost reduction goal. We create Pareto charts of the part count of the existing product (part count by subassembly and part count by individual part number) and define a goal for part count reduction. We define test protocols that capture the functionality customers care about. We test the existing product and set performance improvement goals for the new one. We test the new product using the same protocols and show the data in a simple A-B format. We present all this data at formal design reviews.

How to define technology projects. We define how the customer does their work. We then define the evolutionary history of our products and services, and project that history forward. For lines of goodness with trajectories that predict improvement, we run projects to improve them. For lines of goodness with stalled trajectories, we run projects to establish new technologies and jump to the next S-curve. We assess our offerings for completeness and create technologies to fill the gap.

How to file the right patents. We ask these questions: How quickly will the customer notice the new functionality or benefit? Once recognized, will they care? Will the patent protect high-volume / high-margin consumables? There are more questions, but these are the ones we start with. And the patent team is an integral part of the technology reviews and product development process.

How to do the learning. We start with the leader’s existing goals and deliverables and identify the necessary How Tos to get their work done. There are no special projects or extra work.

If you’re interested in learning more about the curriculum or how to enroll, send me an email mike@shipulski.com.

Image credit — Paul VanDerWerf

Resurrecting Manufacturing Through Product Simplification

Product simplification can radically improve profits and radically improve product robustness. Here’s a graph of profit per square foot ($/ft^2), which improved by a factor of seven, and warranty cost per unit ($/unit, a measure of product robustness), which improved by a factor of four. The improvements are measured against the baseline data of the legacy product which was replaced by the simplified product. Design for Assembly (DFA) was used to simplify the product and Robust Design methods were used to reduce warranty cost per unit.

I will go on record that everyone will notice when profit per square foot increases by a factor of seven.

And I will also go on record that no one will believe you when you predict product simplification will radically improve profit per square foot.

And I will go on record that when warranty cost per unit is radically reduced, customers will notice. Simply put, the product doesn’t break and your customers love it.

But here’s the rub. The graph shows data over five years, which is a long time. And if the product development takes two years, that makes seven long years. And in today’s world, seven years is at least four too many. But take another look at the graph. Profit per square foot doubled in the first two years after launch. Two years isn’t too long to double profit per square foot. I don’t know of a faster way. More strongly, I don’t know of another way to get it done, regardless of the timeline.

I think your company would love to double the profit per square foot of its assembly area. And I’ve shown you the data that proves it’s possible. So, what’s in the way of giving it a try?

For the details about the work, here’s a link – Systematic DFMA Deployment, It Could Resurrect US Manufacturing.

The best time to design cost out of our products is now.

With inflation on the rise and sales on the decline, the time to reduce costs is now.

But before you can design out the cost you’ve got to know where it is. And the best way to do that is to create a Pareto chart that defines product cost for each subassembly, with the highest cost subassemblies on the left and the lowest cost on the right. Here’s a pro tip – Ignore the subassemblies on the right.

Use your costed Bills of Materials (BOMs) to create the Paretos. You’ll be told that the BOMs are wrong (and they are), but they are right enough to learn where the cost is.

For each of the highest-cost subassemblies, create a lower-level Pareto chart that sorts the cost of each piece-part from highest to lowest. The pro tip applies here, too – Ignore the parts on the right.
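The two-level Pareto described above can be sketched in a few lines of Python. This is only an illustration: the BOM rows, subassembly names, and costs are made up, and a real costed BOM export would take their place.

```python
from collections import defaultdict

# Hypothetical costed BOM rows: (subassembly, part, cost per product in dollars).
BOM = [
    ("frame", "side plate", 42.0),
    ("frame", "cross brace", 18.0),
    ("drive", "motor", 95.0),
    ("drive", "gearbox", 60.0),
    ("drive", "coupling", 5.0),
    ("guard", "cover", 12.0),
    ("guard", "fasteners", 3.0),
]

def pareto(costs):
    """Rank items by cost, highest first, with the cumulative share of total cost."""
    total = sum(costs.values())
    ranked = sorted(costs.items(), key=lambda item: item[1], reverse=True)
    rows, running = [], 0.0
    for name, cost in ranked:
        running += cost
        rows.append((name, cost, running / total))
    return rows

def cost_by_subassembly(bom):
    """Roll part costs up to the subassembly level."""
    totals = defaultdict(float)
    for subassembly, _part, cost in bom:
        totals[subassembly] += cost
    return dict(totals)

def cost_by_part(bom, subassembly):
    """Part-level costs for a single subassembly."""
    return {part: cost for sub, part, cost in bom if sub == subassembly}

# Top-level Pareto: highest-cost subassemblies come first (the left of the chart).
top_level = pareto(cost_by_subassembly(BOM))
for name, cost, cumulative in top_level:
    print(f"{name:8s} ${cost:7.2f}  cumulative {cumulative:.0%}")

# Lower-level Pareto for the highest-cost subassembly.
worst = top_level[0][0]
for name, cost, cumulative in pareto(cost_by_part(BOM, worst)):
    print(f"  {name:12s} ${cost:7.2f}  cumulative {cumulative:.0%}")
```

The cumulative column is what makes "ignore the ones on the right" concrete: once the running share is close to 100%, the remaining items cannot move the total much.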

Because the design community designed in the cost, they are the ones who must design it out. And to help them prioritize the work, they should be the ones who create the Pareto charts from the BOMs. They won’t like this idea, but tell them they are the only ones who can secure the company’s future profits and buy them lots of pizza.

And when someone demands you reduce labor costs, don’t fall for it. Labor cost is about 5% of the product cost, so reducing it by half doesn’t get you much. Instead, make a Pareto chart of part count by subassembly. Focus the design effort on reducing the part count of subassemblies on the left. Pro tip – Ignore the subassemblies on the right. The labor time to assemble parts that you design out is zero, so when demand returns, you’ll be able to pump out more products without growing the footprint of the factory. But, more importantly, the cost of the parts you design out is also zero. Designing out the parts is the best way to reduce product costs.
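The labor arithmetic is worth writing out. The sketch below uses the 5% labor share stated above; the part counts by subassembly are invented purely to show the ranking step.

```python
def saving_from_labor_cut(labor_share, labor_reduction):
    """Fraction of total product cost saved by reducing labor cost."""
    return labor_share * labor_reduction

labor_share = 0.05      # from the text: labor is about 5% of product cost
labor_reduction = 0.5   # cutting labor cost in half
saving = saving_from_labor_cut(labor_share, labor_reduction)
print(f"Halving labor saves {saving:.1%} of product cost")

# Hypothetical part counts by subassembly, ranked highest first
# so the design effort starts on the left of the Pareto.
part_counts = {"frame": 48, "drive": 112, "guard": 21, "controls": 64}
ranked = sorted(part_counts.items(), key=lambda item: item[1], reverse=True)
for name, count in ranked:
    print(f"{name:10s} {count:4d} parts")
```

Halving a 5% slice removes only 2.5% of product cost, which is why the part-count Pareto is the better place to spend design effort.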

Pro tip – Set a cost reduction goal of 35%. And when they complain, increase it to 40%.

In parallel to the design work to reduce part count and costs, design the test fixtures and test protocols you’ll use to make sure the new, lower-cost design outperforms the existing design. Certainly, with fewer parts, the new one will be more reliable. Pro tip – As soon as you can, test the existing design using the new protocols because the only way to know if the new one is better is to measure it against the test results of the old one.

And here’s the last pro tip – Start now.

Image credit — aisletwentytwo

Is the new one better than the old one?

Successful commercialization of products and services is fueled by one fundamental – making the new one better than the old one. If the new one is better the customer experience is better, the marketing is better, the sales are better and the profits are better.

It’s not enough to know in your heart that the new one is better, there’s got to be objective evidence that demonstrates the improvement. The only way to do that is with testing. There are a number of types of testing mechanisms, but whether it’s surveys, interviews or in-the-lab experiments, test results must be quantifiable and repeatable.

The best way I know to determine if the new one is better than the old one is to test both populations with the same test protocol done on the same test setup and measure the results (in a quantified way) using the same measurement system. Sounds easy, but it’s not. The biggest mistake is the confusion between the “same” test conditions and “almost the same” test conditions. If the test protocol is slightly different there’s no way to tell if the difference between new and old is due to goodness of the new design or the badness of the test setup. This type of uncertainty won’t cut it.

You can never be 100% sure that the new one is better than the old one, but that’s where statistics come in handy. Without getting deep into the statistics, here’s how it goes. For both populations’ test results, the mean and standard deviation (spread) are calculated, and, taking into consideration the sample size of the test results, the statistical test will tell you if they’re different and the confidence of its discernment.

The statistical calculations (Student’s t-test) aren’t all that important; what’s important is to understand the implications of the calculations. When there’s a small difference between new and old, the sample size must be large for the statistics to recognize a difference. When the difference between populations is huge, a sample size of one will do nicely. When the spread of the data within a population is large, the statistics need a large sample size or they can’t tell new from old. But when the data is tight, they can see more clearly and need fewer samples to see a difference.
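To make those implications tangible, here is a minimal sketch of the equal-variance two-sample t statistic, assuming both groups have the same sample size and the same standard deviation. The function name and the numbers are illustrative only. A larger statistic means more confidence that new differs from old; the exact threshold depends on the degrees of freedom and comes from a t table or a statistics package.

```python
import math

def t_statistic(difference, spread, n):
    """t statistic for two groups of n samples each, sharing one standard deviation.

    difference: mean of new minus mean of old
    spread:     standard deviation within each group
    n:          samples per group
    """
    return difference / (spread * math.sqrt(2.0 / n))

# Small difference, wide spread: the statistic only grows with the square root of n.
for n in (3, 30, 300):
    print(f"n={n:3d}  t={t_statistic(difference=1.0, spread=2.0, n=n):.2f}")

# Same small difference, but tight data: three samples go a long way.
print(f"tight data, n=3  t={t_statistic(difference=1.0, spread=0.2, n=3):.2f}")

# Huge difference with the original wide spread: again, three samples are plenty.
print(f"huge difference, n=3  t={t_statistic(difference=20.0, spread=2.0, n=3):.2f}")
```

In this sketch, cutting the spread by a factor of ten buys as much as a hundredfold increase in sample size, which is the trade described above.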

If marketing claims are based on large sample sizes, the difference between new and old is small. (No one uses large sample sizes unless they have to because they’re expensive.) But if in a design review for the new product the sample size is three and the statistical confidence is 95%, new is far better than old. If the average of new is much larger than the average of old and the sample size is large yet the confidence is low, the statistics know there’s a lot of variability within the populations. (A visual check should show the distributions to be more wide than tall.)

The measurement systems used in the experiments can give a good indication of the difference between new and old. If the measurement system is expensive and complicated, likely the difference between new and old is small. Like with large sample sizes, the only time to use an expensive measurement system is when it is needed. And when the difference between new and old is small, what’s needed is the expensive measurement system’s ability to accurately and repeatably measure small differences (micrometers vs. meters).

If you need large sample sizes, expensive measurement systems and complicated statistical analyses, the new one isn’t all that different from the old one. And when that’s the case, your new profits will be much like your old ones. But if your naked eye can see the difference with a back-to-back comparison using a sample size of one, you’re on to something.

Image credit – amanda tipton

Creating a brand that lasts.

One of the best ways to improve your brand is to improve your products. The most common way is to provide more goodness for less cost – think miles per gallon. Usually it’s a straightforward battle between market leaders, where one claims quantifiable benefit over the other – Ours gets 40 mpg and theirs doesn’t. And the numbers are tied to fully defined test protocols and testing agencies to bolster credibility. Here’s the data. Buy ours.

But there’s a more powerful way to improve your brand, and that’s to map your products to reliability. It’s a far more difficult game than the quantified head-to-head comparison of fuel economy and it’s a longer play, but done right, it’s a lasting play that is difficult to beat. Run the thought experiment: think about the brands you associate with reliability. The brands that come to mind are strong, lasting brands, brands with staying power, brands whose products you want to buy, brands you don’t want to compete against. When you buy their products you know what you’re going to get. Your friends tell you stories about their products.

There’s a complete tool set to create products that map to reliability, and the tools work. But to work them, the commercialization team has to have the right mindset. The team must have the patience to formally define how all the systems work and how they interact. (Sounds easy, but it can be painfully time consuming and the level of detail is excruciatingly extreme.) And they have to be willing to work through the discomfort of developing a common understanding of how things actually work. (Sounds like this shouldn’t be an issue, but it is – at the start, everyone has a different idea on how the system works.) But more importantly, they’ve got to get over the natural tendency to blame the customer for using the product incorrectly and learn to design for unintended use.

The team has got to embrace the idea that the product must be designed for use in unpredictable ways in uncontrolled conditions. Where most teams want to narrow the inputs, this team designs for a wider range of inputs. Where it’s natural to tighten the inputs, this team designs the product to handle a broader set of inputs. Instead of assuming everything will work as intended, the team must assume things won’t work as intended (if at all) and redesign the product so it’s insensitive to things not going as planned. It’s strange, but the team has to design for hypothetical situations and potential problems. And more strangely, it’s not enough to design for potential problems the team knows about, they’ve got to design for potential problems they don’t know about. (That’s not a typo. The team must design for failure modes it doesn’t know about.)

How does a team design for failure modes it doesn’t know about? They build a computer-based behavioral model of the system, right down to the nuts, bolts and washers, and they create inputs that represent the environment around the system. They define what each element does and how it connects to the others in the system, capturing the governing physics and propagation paths of connections. Then they purposefully break the functions using various classes of failure types, run the analysis and review the potential causes. Or, in the reverse direction, the team perturbs the system’s elements with inputs and, as the inputs ripple through the design, they find previously unknown undesirable (harmful) functions.

Purposefully breaking the functions in known ways creates previously unknown potential failure causes. The physics-based characterization and the interconnection (interaction) of the system elements generate unpredicted potential failure causes that can be eliminated through design. In that way, the software model helps find potential failures the team did not know about. And, purposefully changing inputs to the system, again through the physics and interconnection of the elements, generates previously unknown harmful functions that can be designed out of the product.
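As a rough illustration of the two directions described above, the sketch below models a system as a graph of elements and traces connections backward (from a broken function to its potential causes) and forward (from a perturbed input to the functions it can disturb). It is a toy under loud assumptions: the element names are hypothetical, it captures only connectivity and none of the governing physics, and it is not a description of how any particular software tool works.

```python
from collections import deque

# Hypothetical system: each key feeds the elements listed in its value.
FEEDS = {
    "ambient heat": ["motor"],
    "power supply": ["motor", "controller"],
    "controller": ["motor"],
    "motor": ["gearbox"],
    "gearbox": ["output shaft"],
    "output shaft": [],
}

def reachable(graph, start):
    """Breadth-first walk: every node reachable from start, excluding start itself."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt != start:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def reverse(graph):
    """Flip every connection so the graph can be walked from effect back to cause."""
    flipped = {node: [] for node in graph}
    for source, targets in graph.items():
        for target in targets:
            flipped.setdefault(target, []).append(source)
    return flipped

def potential_causes(function):
    """Break a function and list every upstream element that could be responsible."""
    return reachable(reverse(FEEDS), function)

def ripple_effects(element):
    """Perturb an element and list every downstream element it can disturb."""
    return reachable(FEEDS, element)

print(sorted(potential_causes("output shaft")))
print(sorted(ripple_effects("ambient heat")))
```

Even in this toy, an environmental input shows up as a potential cause of a failure several connections away, which hints at why a full model can surface failure causes nobody listed in advance.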

If you care about the long-term staying power of your brand, you may want to take a look at TechScan, the software tool that makes all this possible.

Image credit — Chris Ford.

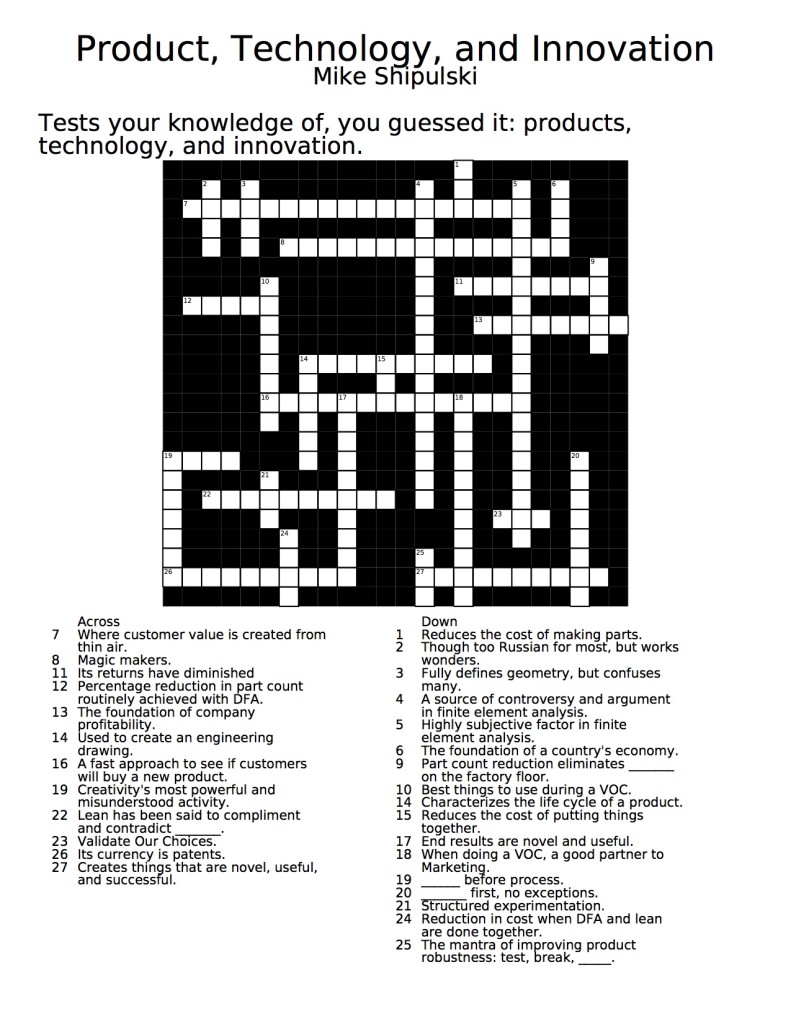

Crossword Puzzle – Product, Technology, Innovation

Here’s something a little different – a crossword puzzle to test your knowledge on products, technology, and innovation. Complete the puzzle using the image below, or download it (and answer key) using the green arrows below, and take your time with it over the Thanksgiving holiday.

[wpdm_file id=5]

[wpdm_file id=4]

Product Thinking

Product costs, without product thinking, drop 2% per year. With product thinking, product costs fall by 50%, and while your competitors’ profit margins drift downward, yours are too high to track by conventional methods. And your company is known for unending increases in stock price and long term investment in all the things that secure the future.

The supply chain, without product thinking, improves 3% per year. With product thinking, longest lead processes are eliminated, poorest yield processes are a thing of the past, problem suppliers are gone, and your distributors associate your brand with uninterrupted supply and on-time delivery.

Product robustness, without product thinking, is the same year-on-year. Re-injecting long forgotten product thinking to simplify the product, product robustness jumps to unattainable levels and warranty costs plummet. And your brand is known for products that simply don’t break.

Rolled throughput yield is stalled at 90%. With product thinking, the product is simplified, opportunities for defects are reduced, and throughput skyrockets due to improved RTY. And your brand is known as a good value – providing good, repeatable functionality at a good price.
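Rolled throughput yield is the product of the first-pass yields of every step a unit passes through, so removing steps raises it even if no individual step gets better. A minimal sketch, assuming ten process steps at 99% each (illustrative numbers chosen to land near the 90% mentioned above):

```python
import math

def rolled_throughput_yield(step_yields):
    """Probability a unit passes every step defect-free: the product of the step yields."""
    return math.prod(step_yields)

# Hypothetical process: ten steps, each with a 99% first-pass yield.
before = rolled_throughput_yield([0.99] * 10)

# Simplified product: half the steps remain, and each step is no better than before.
after = rolled_throughput_yield([0.99] * 5)

print(f"10 steps: {before:.1%}   5 steps: {after:.1%}")
```

The per-step quality is identical in both cases; the improvement comes entirely from having fewer opportunities for a defect.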

Lean, without product thinking has delivered wonderful results, but the low hanging fruit is gone and lean is moving into the back office. With product thinking, the design is changed and value-added work is eliminated along with its associated non-value added work (which is about 8 times bigger); manufacturing monuments with their long changeover times are ripped out and sold to your competitors; work from two factories is consolidated into one; new work is taken on to fill the emptied factories; and profit per square foot triples. And your brand is known for best-in-class quality, unbeatable on time delivery, world class performance, and pioneering the next generation of lean.

The sales argument is low price and good payment terms. With product thinking, the argument starts with product performance and ends with product reliability. The sales team is energized, and your brand is linked with solid products that just plain work.

The marketing approach is stickers and new packaging. With product thinking, it’s based on competitive advantage explained in terms of head-to-head performance data and a richer feature set. And your brand stands for winning technology and killer products.

Product thinking isn’t for everyone. But for those that try – your brand will thank you.

How to help engineers do new.

Creating new products that provide a useful function is hard, and ensuring they function day-in and day-out is harder. Plain and simple, engineering is hard.

Planes must fly, cars must steer, and Velcro must stick. But, at every turn, there are risks, reasons why a new design won’t work, and it’s the engineer’s job to make the design insensitive to these risks. (Called increasing the signal-to-noise ratio in some circles.) At a fundamental level engineering is about safety, and at a higher level it’s about sales – no function, no sales.

That’s why at every opportunity engineers reduce risk. (And thank goodness we do.) It makes sense that we’re the ones that think things through to the smallest detail, that can’t move on until we have the answer, that ask odd questions that seem irrelevant. It all makes sense since we’re the ones responsible if the risks become reality. We’re the ones that bear ultimate responsibility for product function and safety, and, thankfully, it shapes us.

But there’s a dark side to this risk reduction mindset – where we block our thinking, where we don’t try something new because of problems we think we may have, problems we don’t have yet. The cause of this innovation-limiting behavior: problem broadening, where we apply a thick layer of problem over the entirety of a new concept, and declare it unworkable. Truth is, we don’t understand things well enough to make that declaration, but, in a knee-jerk way, we misapply our natural risk reduction mindset. Clearly, problems exist when doing new, but real problems are not broad, real problems are not like peanut butter and jelly spread evenly across the whole sandwich. Real problems are narrow; real problems are localized, like getting a drip of jelly on your new shirt.

How to get the best of both worlds? How to embrace the risk reduction mindset so products are safe and help engineering folks to try something radically new? To innovate?

We’ve got the risk reduction world covered, so it’s all about enhancing the try-something-new side. To do this we need to combat problem broadening; we need a process for problem narrowing. With problem narrowing, engineers drill down until the problem is defined as the interaction of two elements (the jelly and your shirt), defined in space (the front of your shirt) and time (when the knife drops a dollop on your shirt). Where problem broadening tells us to avoid making peanut butter and jelly sandwiches altogether (those sandwiches will always dirty our shirts), problem narrowing tells us to put something between the knife and the front of your shirt, or to put on your new shirt after you make your sandwich, or to do something creative to keep the jelly away from our shirt.

Problems narrow as knowledge deepens. Work through your fears, try something new, and advance your knowledge. Then define your problems narrowly, and solve them.

Innovate.

Engineering’s Contribution to the Profit Equation

We all want to increase profits, but sometimes we get caught in the details and miss the big picture:

Profit = (Price – Cost) x Volume.

It’s a simple formula, but it provides a framework to focus on fundamentals. While all parts of the organization contribute to profit in their own way, engineering’s work has a surprisingly broad impact on the equation.

The market sets price, but engineering creates function, and improved function increases the price the market will pay. Design the product to do more, and do it better, and customers will pay more. What’s missing for engineering is an objective measure of what is good to the customer.
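The profit equation above is simple enough to explore directly. The sketch below uses made-up baseline numbers (price, cost, and volume are all assumptions) to compare what a 10% improvement in each term does to profit.

```python
def profit(price, cost, volume):
    """Profit = (Price - Cost) x Volume."""
    return (price - cost) * volume

# Hypothetical baseline: $100 price, $80 cost, 1,000 units.
base = {"price": 100.0, "cost": 80.0, "volume": 1000.0}
baseline = profit(**base)

# Improve one term at a time by 10% and compare against the baseline.
scenarios = {
    "price +10%": dict(base, price=base["price"] * 1.10),
    "cost -10%": dict(base, cost=base["cost"] * 0.90),
    "volume +10%": dict(base, volume=base["volume"] * 1.10),
}

print(f"baseline     profit {baseline:10,.0f}")
for label, terms in scenarios.items():
    result = profit(**terms)
    change = (result - baseline) / baseline
    print(f"{label:12s} profit {result:10,.0f}  ({change:+.0%})")
```

With these particular numbers the price and cost terms carry far more leverage than volume, because a thin margin magnifies any change to either side of it. Different baselines will shift the balance.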

Pushing on Engineering

With manufacturing change is easy – lean this, six sigma that, more with less year-on-year. With engineering, not so much. Why?

Manufacturing is about cost, waste, efficiency, and yield (how to make it), and engineering is about function (what it does) – fundamental differences but not the why. The consequence of failure is the why. If manufacturing doesn’t deliver, the product is made like last year (with a bit more waste and cost than planned), but the product still sells. With engineering, not so much. If engineering mistakenly designs the Fris out of the Frisbee or the Hula out of the Hoop, no sales. That’s the why.

No function, no sales, no company, this is fear. This is why it feels dangerous to push on engineering; push on engineering and the wheels may fall off. This is why the organization treads lightly; this is why the CEO does not push.

As technical leaders we are the ones who push directly on engineers. We stretch them to create novel technology that creates customer value and drives sales. (If, of course, customers value the technology.) We spend our days in the domain of stress, strain, printed circuit boards, programming languages, thermal models, and egos. As technologists, it’s daunting to push effectively on engineering; as non-technologists even more. How can a CEO do it without the subject matter expertise? The answer is one-page thinking.

One-page thinking forces engineers to describe our work in plain English, simple English, simple language, pictures, images. This cuts clutter and cleans our thinking so non-technologists can understand what’s happening, what’s going on, what we’re thinking, and shape us in the direction of customer, of market, of sales. The result is a hybrid of strong technology, strong technical thinking, and strong product, all with a customer focus, a market focus. A winning combination.

There are several rules to one-page thinking, but start with this one:

Use one page.

As CEO, ask your technical leaders (even the VP or SVP kind) to define each of their product development (or technology) projects on one page, but don’t tell them how. (The struggle creates learning.) When they come back with fifteen PowerPoint slides (a nice reduction from fifty), read just the first one, and send them away. When they come back with five, just read the first. They’ll get the idea. But be patient. To use just one page makes things remarkably clear, but it’s remarkably difficult.

Once the new product (or technology) is defined on one page, it’s time to reduce the fear of pushing on engineering – one-page thinking at the problem level. First, ask the technical leaders for a one-page description of each problem that must be overcome (one page per problem and address only the fundamental problems). Next, for each problem ask for baseline data (test data) on the product you make today. (For each problem they’ll likely have to create a robustness surrogate, a test rig to evaluate product performance.) The problem is solved (and the product will function well) when the new one out-performs the old one. The fear is gone.

When your engineers don’t understand, they can’t explain things on one page. But when they can, you understand.

Improve the US economy, one company at a time.

I think we can turn around the US economy, one company at a time. Here’s how:

To start, we must make a couple commitments to ourselves. 1. We will do what it takes to manufacture products in the US because it’s right for the country. 2. We will be more profitable because of it.

Next, we will set up a meeting with our engineering community, and we will tell them about the two commitments. (We will wear earplugs because the cheering will be overwhelming.) Then, we will throw down the gauntlet; we will tell them that, going forward, it’s no longer acceptable to design products as before, that going forward the mantra is: half the cost, half the parts, half the time. Then we will describe the plan.

On the next new product we will define cost, part count, and assembly time goals 50% less than the existing product; we will train the team on DFMA; we will tear apart the existing product and use the toolset; we will learn where the cost is (so we can design it out); we will learn where the parts are (so we can design them out); we will learn where the assembly time is (so we can design it out).

On the next new product we will front load the engineering work; we will spend the needed time to do the up-front thinking; we will analyze; we will examine; we will weigh options; we will understand our designs. This time we will not just talk about the right work, this time we will do it.

On the next new product we will use our design reviews to hold ourselves accountable to the 50% reductions, to the investment in DFMA tools, to the training plan, to the front-loaded engineering work, to our commitment to our profitability and our country.

On the next new product we will celebrate the success of improved product functionality, improved product robustness, a tighter, more predictable supply chain, increased sales, increased profits, and increased US manufacturing jobs.

On the next new product we will do what it takes to manufacture products in the US because it’s the right thing for the country, and we will be more profitable because of it.

If you’d like some help improving the US economy one company at a time, send me an email (mike@shipulski.com), and I’ll help you put a plan together.

p.s. I’m holding a half-day workshop on how to implement systematic cost savings through product design on June 13 in Providence RI as part of the International Forum on DFMA — here’s the link. I hope to see you there.

Mike Shipulski
