Archive for the ‘Complexity’ Category

Progress is powered by people.

People ask why.

People buy products from people.

The right people turn activity into progress.

People want to make a difference, and they do.

People have biases which bring a richer understanding.

People use judgement – that’s why robots don’t run projects.

People recognize when the rules don’t apply and act accordingly.

Business models are an interconnected collection of people processes.

The simplest processes require judgement; that’s why they’re run by people.

People don’t like good service; they like effective interaction with other people.

People are the power behind the tools. (I never met a hammer that swung itself.)

Progress is powered by people.

Image credit – las – intially

Dissent Without Reprisal – a key to company longevity

In strategic planning there’s a strong forcing function that causes the organization to converge on a singular, company-wide approach. While this convergence can be helpful, when its force is absolute it stifles new ideas. The result is an operating plan that incrementally improves on last year’s work at the expense of work that creates new businesses, sells to new customers and guards against the dark forces of disruptive competition. In times of change, convergence must be tempered to yield a bit of diversity in the approach. But for diversity to make it into the strategic plan, dissent must be an integral (and accepted) part of the planning process. And to inject meaningful diversity, the dissenting voice must be as loud as the voice of convergence.

It’s relatively easy for an organization to come to consensus on an idea that has little uncertainty and marginal upside. But there can be no consensus on an idea with a high degree of uncertainty, even if the upside is monumental. If there’s a choice between minimizing uncertainty and creating something altogether new, the strategic process is fundamentally flawed because the planning group will always minimize uncertainty. Organizationally we are set up to deliver certainty, to make our metrics and meet our timelines. We have an organizational aversion to uncertainty, and, therefore, our organizational genetics demand we say no to ideas that create new business models, new markets and new customers. What’s missing is the organizational forcing function to counterbalance our aversion to uncertainty with a healthy grasping of it. If the company is to survive over the next 20 years, uncertainty must be injected into our organizational DNA. Organizationally, companies must be restructured to eliminate the choice between work that improves existing products/services and work that creates altogether new markets, customers, products and services.

When Congress or the President wants to push their agenda in a way that is not in the best long-term interest of the country, no one within the party wants to be the dissenting voice. Even if the dissenting voice is right and Congress and the President are wrong, the political (career) implications of dissent within the party are too severe. And, organizationally, that’s why there’s a third branch of government that’s separate from the other two. More specifically, that’s why Justices of the Supreme Court are appointed for life. With lifetime appointments their dissenting voice can stand toe-to-toe with the voice of presidential and congressional convergence. Somehow, for long-term survival, companies must find a way to emulate that separation of power and protect the work with high uncertainty just as the Justices protect the law.

The best way I know to protect work with high uncertainty is to create separate organizations with separate strategic plans, operating plans and budgets. In that way, it’s never a decision between incremental improvement and discontinuous improvement. The decision becomes two separate decisions for two separate teams: Of the candidate projects for incremental improvement, which will be part of team A’s plan? And, of the candidate projects for discontinuous improvement, which will become part of team B’s plan?

But this doesn’t solve the whole challenge because at the highest organizational level, the level that sits above Teams A and B, the organizational mechanism for dissent is missing. At this highest level there must be healthy dissent by the board of directors. Meaningful dissent requires deep understanding of the company’s market position, competitive landscape, organizational capability and capacity, the leading technology within the industry (the level, completeness and maturity), the leading technologies in adjacent industries and technologies that transcend industries (e.g., digital). But the trouble is board members cannot spend the time needed to create the deep understanding required to formulate meaningful dissent. Yes, organizationally the board of directors can dissent without reprisal, but they don’t know the business well enough to dissent in the most meaningful way.

In medieval times the jester was an important player in the organization. He entertained the court but he also played the role of the dissenter. Organizationally, because the king and queen expected the jester to demonstrate his sharp wit, he could poke fun at them when their ideas didn’t hang together. He could facilitate dissent with a humorous play on a deadly serious topic. It was delicate work, as one step too far and the jester was no more. To strike the right balance the jester developed deep knowledge of the king, queen and major players in the court. And he had to know how to recognize when it was time to dissent and when it was time to keep his mouth shut. The jester had the confidence of the court, knew the history and could see invisible political forces at play. The jester had the organizational responsibility to dissent and the deep knowledge to do it in a meaningful way.

Companies don’t need a jester, but they do need a T-shaped person with broad experience, deep knowledge and the organizational status to dissent without reprisal. Maybe this is a full-time board member or a hired gun who works for the board (or CEO?), but either way they are incentivized to dissent in a meaningful way.

I don’t know what to call this new role, but I do know it’s an important one.

Image credit – Will Montague

If you don’t know the critical path, you don’t know very much.

Once you have a project to work on, it’s always a challenge to choose the first task. And once finished with the first task, the next hardest thing is to figure out the next task.

Two words to live by: Critical Path.

By definition, the next task to work on is the next task on the critical path. How do you tell if the task is on the critical path? When you are late by one day on a critical path task, the project, as a whole, will finish a day late. If you are late by one day and the project won’t be delayed, the task is not on the critical path and you shouldn’t work on it.
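The one-day-late test is the same idea behind the critical path method used in project scheduling. Here is a minimal Python sketch, assuming each task has a fixed duration in days and a known set of predecessor tasks; the task names and numbers are hypothetical. A forward pass finds the earliest each task can finish, a backward pass finds the latest it can finish without delaying the project, and tasks with zero slack are on the critical path.

```python
from graphlib import TopologicalSorter


def critical_path(durations, deps):
    """Return (project_end, slack_per_task, critical_tasks).

    durations: {task: duration in days}
    deps: {task: set of tasks that must finish before it starts}
    """
    graph = {task: deps.get(task, set()) for task in durations}
    order = list(TopologicalSorter(graph).static_order())

    # Forward pass: the earliest each task can finish.
    earliest_finish = {}
    for task in order:
        start = max((earliest_finish[p] for p in graph[task]), default=0)
        earliest_finish[task] = start + durations[task]
    project_end = max(earliest_finish.values())

    # Backward pass: the latest each task can finish without delaying the project.
    successors = {task: [] for task in durations}
    for task, preds in graph.items():
        for p in preds:
            successors[p].append(task)
    latest_finish = {}
    for task in reversed(order):
        latest_finish[task] = min(
            (latest_finish[s] - durations[s] for s in successors[task]),
            default=project_end,
        )

    # Slack: how many days a task can slip before the whole project slips.
    slack = {task: latest_finish[task] - earliest_finish[task] for task in durations}
    critical = [task for task in order if slack[task] == 0]
    return project_end, slack, critical


# Hypothetical project: one new component (the motor) and one carryover part.
durations = {"spec": 3, "new_motor": 10, "housing": 4, "test": 2}
deps = {"new_motor": {"spec"}, "housing": {"spec"}, "test": {"new_motor", "housing"}}

end, slack, critical = critical_path(durations, deps)
print(end, slack["housing"], critical)  # 15 6 ['spec', 'new_motor', 'test']
```

Slip `new_motor` by a day and the project finishes on day 16; slip `housing` by a day and it still finishes on day 15. That is the one-day-late test, expressed in code.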

Rule 1: If you can’t work the critical path, don’t work on anything.

Working on a non-critical path task is worse than working on nothing. Working on a non-critical path task is like waiting with perspiration. It’s worse than activity without progress. Resources are consumed on unnecessary tasks and the resulting work creates extra constraints on future work, all in the name of leveraging the work you shouldn’t have done in the first place.

How to spot the critical path? If a similar project has been done before, ask the project manager what the critical path was for that project. Then listen, because that’s the critical path. If your project is similar to a previous project except with some incremental newness, the newness is on the critical path.

Rule 2: Newness, by definition, is on the critical path.

But as the level of newness increases, it’s more difficult for project managers to tell the critical path from work that should wait. If you’re the right project manager, even for projects with significant newness, you are able to feel the critical path in your chest. When you’re the right project manager, you can walk through the cubicles and your body is drawn to the critical path like a divining rod. When you’re the right project manager and someone in another building is late on their critical path task, you somehow unknowingly end up getting a haircut at the same time and offering them the resources they need to get back on track. When you’re the right project manager, the universe notifies you when the critical path has gone critical.

Rule 3: The only way to be the right project manager is to run a lot of projects and read a lot. (I prefer historical fiction and biographies.)

Not all newness is created equal. If the project won’t launch unless the newness is wrestled to the ground, that’s level 5 newness. Stop everything, clear the decks, and get after it until it succumbs to your diligence. If the product won’t sell without the newness, that’s level 5 and you should behave accordingly. If the newness causes the product to cost a bit more than expected, but the product will still sell like nobody’s business, that’s level 2. Launch it and cost reduce it later. If no one will notice if the newness doesn’t make it into the product, that’s level 0 newness. (Actually, it’s not newness at all, it’s unneeded complexity.) Don’t put it in the product and don’t bother telling anyone.

Rule 4: The newness you’re afraid of isn’t the newness you should be afraid of.

A good project plan starts with a good understanding of the newness. Then, the right project work is defined to make sure the newness gets the attention it deserves. The problem isn’t the newness you know, the problem is the unknown consequence of newness as it ripples through the commercialization engine. New product functionality gets engineering attention until it’s run to ground. But what if the newness ripples into new materials that can’t be made or new assembly methods that don’t exist? What if the new materials are banned substances? What if your multi-million dollar test stations don’t have the capability to accommodate the new functionality? What if the value proposition is new and your sales team doesn’t know how to sell it? What if the newness requires a new distribution channel you don’t have? What if your service organization doesn’t have the ability to diagnose a failure caused by the newness?

Rule 5: The only way to develop the capability to handle newness is to pair a soon-to-be great project manager with an already great project manager.

It may sound like an inefficient way to solve the problem, but pairing the two project managers is a lot more efficient than letting a soon-to-be great project manager crash and burn. After an inexperienced project manager runs a project into the ground, what’s the first thing you do? You bring in a great project manager to get the project back on track and keep them in the saddle until the product launches. Why not assume the wheels will fall off unless you put a pro alongside the high potential talent?

Rule 6: When your best project managers tell you they need resources, give them what they ask for.

If you want to deliver new value to new customers, there’s no better way than to develop good project managers. A good project manager instinctively knows the critical path; they know how the work is done; they know how to unwind situations that need to be unwound; they have the personal relationships to get things done when no one else can; because they are trusted, they can get people to bend (and sometimes break) the rules and feel good doing it; and they know what they need to successfully launch the product.

If you don’t know your critical path, you don’t know very much. And if your project managers don’t know the critical path, you should stop what you’re doing, pull hard on the emergency brake with both hands and don’t release it until you know they know.

Image credit – Patrick Emerson

Organized For Uncertainty

There are many different organizational structures, each with its unique set of strengths and weaknesses. The top-down organization has its strong alignment and limited flexibility while the bottom-up has its empowering consensus and sloth-like pace. Which one’s better? Well, it depends.

The function-based organization has strong subject matter expertise and weak cross-function coordination, while the business unit-based organization knows its product, market and customers but has difficulty working east-west across product families and customer segments. Is one better than the other? Same answer – it depends.

The matrix organization has the best of both worlds – business unit and functional – and isn’t particularly good at either. And there’s the ambidextrous organization that I don’t pretend to understand. If I had to choose one, which would I choose? It depends.

The best organizational structure depends on what you’re trying to do, depends on the environmental context, depends on the organization’s history and biases and the general state of organizational capability, capacity and profitability. But that’s not the whole picture because none of this is static. All of this changes over time and it changes in an unpredictable way. Because the best organizational structure depends on all these complicating factors and the factors change over time, there is never a “best” organizational structure.

Constant change has always been the dominant fundamental perturbing and disturbing our organizational structures. But, as competition turns up the wick and the pace of learning builds geometrically, change’s ability to influence our organizational structures has grown from disturbing to dismantling.

Change is the dominant fundamental, but its real power comes from the uncertainty it brings to the party. Our tired, old organizational structures were designed to survive in a long-dead era of glacial change and rationed uncertainty. And though our organizational structures were built in granite, the elevated sea levels of uncertainty are creating fissures in our inflexible organizational structures and profitability is leaking from all levels.

If uncertainty is the disease, adaptability is the antidote. The organization must continually monitor its environment for changes. And when it senses an emerging shift, the organization must move resources in a way that satisfies the new reality. The organization structure shifts to fit the work. The structure changes as the character of the projects changes. The organizational structure never reaches equilibrium; it survives through a continual evolutionary loop of sense-change-sense.

I don’t have a name for an organization like this, and I think it’s best not to name it. Instead, I think it’s best to describe how it behaves. It’s a living organization that behaves like a living organism. It wants to survive, so it changes itself based on changes in its environment. It’s an organization that self organizes.

Directionally, organizational structures should be less static and more dynamic, and they should evolve to fit the work. The difficult part is how to define the explicit rules on how it should change, when it should change and how it decides. But it’s more than difficult to describe explicit rules; it’s impossible. In domains with high levels of uncertainty there can be no predictability, and without predictability a finite set of explicit rules will not work. The DNA of this living organization is implicit knowledge, evolutionary experimentation and personal judgement.

I’m not sure what to call this type of organizational structure, and I’m not exactly sure how to create one. But it sure sounds like a lot of fun.

Image credit — actor212

Creating a brand that lasts.

One of the best ways to improve your brand is to improve your products. The most common way is to provide more goodness for less cost – think miles per gallon. Usually it’s a straightforward battle between market leaders, where one claims quantifiable benefit over the other – Ours gets 40 mpg and theirs doesn’t. And the numbers are tied to fully defined test protocols and testing agencies to bolster credibility. Here’s the data. Buy ours.

But there’s a more powerful way to improve your brand, and that’s to map your products to reliability. It’s a far more difficult game than the quantified head-to-head comparison of fuel economy and it’s a longer play, but done right, it’s a lasting play that is difficult to beat. Run the thought experiment: think about the brands you associate with reliability. The brands that come to mind are strong, lasting brands, brands with staying power, brands whose products you want to buy, brands you don’t want to compete against. When you buy their products you know what you’re going to get. Your friends tell you stories about their products.

There’s a complete tool set to create products that map to reliability, and the tools work. But to use them, the commercialization team has to have the right mindset. The team must have the patience to formally define how all the systems work and how they interact. (Sounds easy, but it can be painfully time consuming and the level of detail is excruciatingly extreme.) And they have to be willing to work through the discomfort of developing a common understanding of how things actually work. (Sounds like this shouldn’t be an issue, but it is – at the start, everyone has a different idea of how the system works.) But more importantly, they’ve got to get over the natural tendency to blame the customer for using the product incorrectly and learn to design for unintended use.

The team has got to embrace the idea that the product must be designed for use in unpredictable ways in uncontrolled conditions. Where most teams want to narrow and tighten the inputs, this team designs the product to handle a broader range of inputs. Instead of assuming everything will work as intended, the team must assume things won’t work as intended (if at all) and redesign the product so it’s insensitive to things not going as planned. It’s strange, but the team has to design for hypothetical situations and potential problems. And more strangely, it’s not enough to design for potential problems the team knows about, they’ve got to design for potential problems they don’t know about. (That’s not a typo. The team must design for failure modes it doesn’t know about.)

How does a team design for failure modes it doesn’t know about? They build a computer-based behavioral model of the system, right down to the nuts, bolts and washers, and they create inputs that represent the environment around the system. They define what each element does and how it connects to the others in the system, capturing the governing physics and propagation paths of connections. Then they purposefully break the functions using various classes of failure types, run the analysis and review the potential causes. Or, in the reverse direction, the team perturbs the system’s elements with inputs and, as the inputs ripple through the design, they find previously unknown undesirable (harmful) functions.

Purposefully breaking the functions in known ways creates previously unknown potential failure causes. The physics-based characterization and the interconnection (interaction) of the system elements generate unpredicted potential failure causes that can be eliminated through design. In that way, the software model helps find potential failures the team did not know about. And, purposefully changing inputs to the system, again through the physics and interconnection of the elements, generates previously unknown harmful functions that can be designed out of the product.
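The propagation idea can be sketched, very roughly, as a walk through a graph. This is not how TechScan works internally (the post doesn’t describe that); it’s a toy Python model, assuming the system is just a set of hypothetical elements with directed connections and no physics at all. Break one element on purpose and a breadth-first search lists every downstream element the failure can reach, along with the path it travels: the kind of chain a team might not think to look for on its own.

```python
from collections import deque


def propagate_failure(connections, failed):
    """Breadth-first walk from a deliberately broken element.

    connections: {element: [elements it feeds into]}
    Returns {reached_element: path the failure travels to get there}.
    """
    paths = {failed: [failed]}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for downstream in connections.get(current, []):
            if downstream not in paths:  # visit each element only once
                paths[downstream] = paths[current] + [downstream]
                queue.append(downstream)
    del paths[failed]  # report only the consequences, not the starting point
    return paths


# Hypothetical system, right down to the washer.
connections = {
    "washer": ["bolt"],
    "bolt": ["bracket"],
    "bracket": ["motor_mount", "sensor_cable"],
    "sensor_cable": ["controller"],
}

# Break each element in turn and print every chain it can set off.
for element in connections:
    for path in propagate_failure(connections, element).values():
        print(" -> ".join(path))
```

A real behavioral model would attach governing physics and failure types to each connection; the graph walk only captures the connectivity part of the story.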

If you care about the long-term staying power of your brand, you may want to take a look at TechScan, the software tool that makes all this possible.

Image credit — Chris Ford.

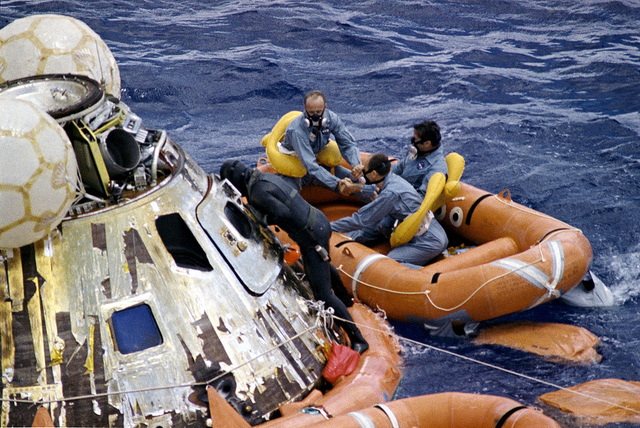

A Life Boat in the Sea of Uncertainty

Work is never perfect, family life is never perfect and neither is the interaction between them. Regardless of your expectations or control strategies, things go as they go. That’s just what they do.

We have far less control than we think. In the pure domain of physics the equations govern predictively – perturb the system with a known input in a controlled way and the output is predictable. When the process is followed, the experimental results repeat, and that’s the acid test. From a control standpoint this is as good as it gets. But even this level of control is more limited than it appears.

Physical laws have bounded applicability – change the inputs a little and the equation may not apply in the same way, if at all. Same goes for the environment. What looks controllable and predictable on the surface may not be. When the inputs change, all bets are off – the experimental results from one test condition may not be predictive in another, even for the simplest systems. In the cold, unemotional world of physical principles, prediction requires judgement, even in lab conditions.

The domains of business and life are nothing like controlled lab conditions. And they’re not governed by physical laws. These domains are a collection of complex people systems which are governed by emotional laws. Where physical systems deliver predictable outputs for known inputs, people systems do not. Scenario 1. Your group’s best performer is overworked, tired, and hasn’t exercised in four weeks. With no warning you ask them to take on an urgent and important task for the CEO. Scenario 2. Your group’s best performer has a reasonable workload (and even a little discretionary time), is well rested and maintains a regular exercise schedule, and you ask for the same deliverable in the same way. The inputs are the same (the urgent request for the CEO), the outputs are far different.

At the level of the individual – the building block level – people systems are complex and adaptive. The first time you ask a person to do a task, their response is unpredictable. The next day, when you ask them to do a different task, they adapt their response based on yesterday’s request-response interaction, which results in a thicker layer of unpredictability. Like pushing on a bag of water, their response is squishy and it’s difficult to capture the nuance of the interaction. And it’s worse because it takes a while for them to dampen the reactionary waves within them.

One person interacts with another and groups react to other groups. Push on them and there’s really no telling how things will go. One silo competes with another for shared resources and complexity is further confounded. The culture of a customer smashes against your standard operating procedures and the seismic pressure changes the already unpredictable transfer functions of both companies. And what about the customer that’s also your competitor? And what about the big customer you both share? Can you really predict how things will go? Do you really have control?

What does all this complexity, ambiguity, unpredictability and general lack of control mean when you’re trying to build a culture of accountability? If people are accountable for executing well, that’s fine. But if they’re held accountable for the results of those actions, they will fail and your culture of accountability will turn into a culture of avoiding responsibility and finding another place to work.

People know uncertainty is always part of the equation, and they know it results in unpredictability. And when you demand predictability in a system that’s uncertain by its nature, as a leader you lose credibility and trust.

As we swim together in the storm of complexity, trust is the life boat. Trust brings people together and makes it easier to row in the same direction. And after a hard day of mistakenly rowing in the wrong direction, trust helps everyone get back in the boat the next day and pull hard in the new direction you point them.

Image credit – NASA

How do you choose what to work on?

There are always too many things to do, too much to work on. And because of this, we must choose. Some have more choice than others, but we all have choice. And to choose, there are several lenses we look through.

What’s good enough? If it’s good enough, there’s no need to work on it. “Good enough” means it’s not a constraint; it’s not in the way of where you want to go.

What’s not good enough? If it’s not good enough, it’s important to work on it. “Not good enough” means it IS a constraint; it IS in the way; it’s blocking your destination.

What’s not happening? If it’s not happening and the vacancy is blocking you from your destination, work on it. Implicit in the three lenses is the assumption of an idealized future state, a well-defined endpoint.

It’s the known endpoint that’s used to judge if there’s a blocking constraint or something missing. And there are two schools of thought on idealized future states: either the systems, environment, competition, and interactions are well understood and idealized future states are the way to go, or things are too complex to predict how they will go. If you’re a member of the idealized-future-state-is-the-way-to-go camp, you’re home free – just use your best judgment to choose the most important constraints and hit them hard. If you’re a believer in complexity and its power to scuttle your predictions, things are a bit more nuanced.

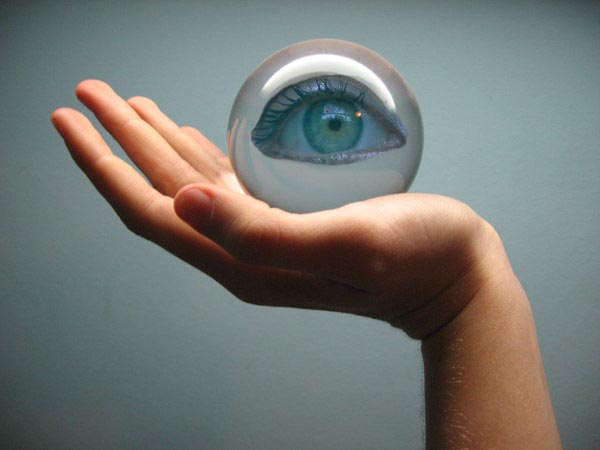

Where the future state folks look through the eyepiece of the telescope toward the chosen nebula, the complexity folks look through the other end of the telescope toward the atomic structure of where things are right now. Complexity thinkers think it’s best to understand where you are, how you got there, and the mindset that guided your journey. With that knowledge you can rough out the evolutionary potential of the future and use that to decide what to work on.

If you got here by holding on to what you had, it’s pretty clear you should try to do more of that, unless, of course, the rules have changed. And to figure out if the rules have changed? Well, you should run small experiments to test if the same rules apply in the same way. Then, do more of what worked and less of what didn’t. And if nothing works even on a small scale, you don’t have anything to hold onto and it’s time to try something altogether new.

If you got here with the hybrid approach – by holding on to what you had complemented with a healthy dose of doing new stuff (innovation) – it’s clear you should try to do more of that, unless, of course, you’re trying to expand into new markets which have different needs, different customers, and different pocketbooks. To figure out what will work, run small experiments, and do more of what worked and less of what didn’t. If nothing works, your next round of small experiments should be radically different. And again, more of what worked, less of what didn’t.

And if you’re a young company and have yet to arrive, you’re already running small experiments to see what will work, so keep going.

There’s a half-life to the things that got us here, and it’s difficult to predict their decay. That’s why it’s best to take small bets on a number of new fronts – small investment, broad investigation of markets, and fast learning. And there’s value in setting a rough course heading into the future, as long as we realize this type of celestial navigation must be informed by regular sextant sightings and course corrections they inform.

Image credit – Hubble Heritage.

How to Make Big Data Far More Powerful

Big Data is powerful – measure what people do on the web, summarize the patterns, and use the information for good. These data sets are so powerful because they’re bigger than big; there’s little bias since the data collection is automatic; and the analysis is automated. There’s huge potential in the knowledge of what people click, what pages they land on, and what places they jump from.

It’s magical to think about what can be accomplished with the landing pages and click-through rates for any demographic you choose. Here are some examples:

- This is the type of content our demographic of value (DOV) lands on, and if we create more content like this we’ll get more from them to land where we want.

- These are the pages our DOV jump from, and if we advertise there more of our DOV will see our products.

- This is the geographic location of our DOV when they land on our website, and if we build out our sales capacity in these locations we’ll sell more.

- This is the time slot when our DOV is most active on their smart phones, and if we tweet more during that time we’ll reach more of them.

But just as there’s immense power in knowing the actions of your DOV (what they click on), there are huge assumptions about what it all means. Here are two big ones:

- All clicks are created equal.

- When more see our content, more will do what we want.

Here is an example of three members (A, B, C) of your demographic of value who take the same measurable action but with different meaning behind it:

Member A, after four drinks, speeds home recklessly; loses control of the car; crashes into your house; and parks the car in your living room.

Member B, after grocery shopping, drives home at the speed limit; the front wheel falls off due to a mechanical problem; loses control of the car; crashes into your house; and parks the car in your living room.

Member C, after volunteering at a well-respected non-profit agency, drives home in a torrential rain 15 miles per hour below the speed limit; a child on a bicycle bolts into the lane without warning and C swerves to miss the child; loses control of the car; crashes into your house; and parks the car in your living room.

All three did the same thing – crashed into your house – but the intent, the why, is different. Same click, but not equal. And when you put your content in front of them, regardless of what you want them to do, A, B, and C will respond differently. Same DOV, but different intentions behind their actions.

Big Data, with its focus on the whats, is powerful, but can be made stereoscopic with the addition of a second lens that can see the whys. Problem is, the whys aren’t captured in a clean, binary way – not transactional but conversational – and are subject to interpretation biases, whereas the integers of the whats are not.

With people, action is preceded by intent, and intent is set by thoughts, feelings, history, and context. And the best way to understand all that is through their stories. If you collect and analyze customer stories you’ll better understand their predispositions and can better hypothesize and test how they’ll respond.

In the Big Data sense, Nirvana for stories is a huge sample size collected quickly with little effort, analysis without biases, and direct access to the stories themselves.

New data streams are needed to collect the whys in a low-overhead way, and new methods are needed to analyze them quickly and without biases. And a new perspective is needed to see not only the amazing power of Big Data (the whats), but the immense potential of seeing the whats with one eye and the whys with the other.

Keep counting the whats with traditional Big Data work – there’s real value there. But also keep one eye on the horizon for new ways to collect and analyze the whys (customer stories) in a Big Data way.

Collection and analysis of customer stories, if the sample size is big enough and biases small enough, is the best way I know to look through the fog and understand emerging customer needs and emerging markets.

If you can figure out how to do it, it will definitely be worth the effort.

Marketing’s Holy Grail – Emerging Customer Needs

The Holy Grail of marketing is to identify emerging customer needs before anyone else and satisfy them to create new markets. It has been a long and fruitless slog as emerging needs have proven themselves elusive. And once candidates are identified, it’s a challenge to agree which are the game-changers and which are the ghosts. There are too many opinions and too few facts. But there’s treasure at the end of the rainbow and the quest continues.

Emerging things are just coming to be, just starting, so they apply to just a small subset of customers; and emerging things are new and different, so they’re unfamiliar. Unfamiliar plus small sample size equals elusive.

I don’t believe in emerging customer needs, I believe in emergent customer behavior.

Emergent behavior is based on actions taken (past tense) and is objectively verifiable. Yes or no, did the customer use the product in a new way? Yes or no, did the customer make the product do something it wasn’t supposed to? Did they use it in a new industry? Did they modify the product on their own? Did they combine it with something altogether unrelated? No argument.

When you ask a customer how to improve your product, their answers aren’t all that important to them. But when a customer takes initiative and action, when they do something new and different with your product, it’s important to them. And even when just a few rogue customers take similar action, it’s worth understanding why they did it – there’s a good chance there’s treasure at the end of that rainbow.

With traditional voice of the customer (VOC) methods, it has been cost-prohibitive to visit enough customers to find the handful at the fringes doing the same crazy new thing with your product. Also, with traditional VOCs, these “outliers” are thrown out because they’re, well, they’re outliers. But emergent behavior comes from these very outliers. New information streams and new ways to visualize them are needed to meet these challenges.

For these new information streams, think VOC without the travel; VOC without leading the witness; VOC where capturing stories is so cheap that so many are captured it becomes possible to find the handful of outliers doing what could be the seed for the next new market.

To reduce the cost of acquisition, stories are entered using an app on a smart phone; to let emergent themes emerge, customers code their own stories with a common, non-biasing set of attributes; and to see patterns and outliers, the coded stories are displayed visually.
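To make “see patterns and outliers” a little more concrete, here is a minimal sketch. It is not how SenseMaker works (its internals aren’t described here); it assumes each story has been reduced to the set of attributes the customer picked from a shared list, and the attribute names and the 5% threshold are hypothetical. It counts identical attribute combinations and flags those shared by a small but repeated handful of customers, the fringe that traditional VOC tends to throw out.

```python
from collections import Counter

# Hypothetical self-coded stories: each story is the set of attributes the
# customer picked from a shared, fixed list. Names are illustrative only.
stories = (
    [{"used as intended", "at home"}] * 40
    + [{"modified it myself", "new industry"}] * 2
    + [{"combined with another product", "at home"}]
)


def find_fringe_themes(stories, max_share=0.05, min_count=2):
    """Return attribute combinations that repeat (at least min_count stories)
    but stay rare (at most max_share of all stories)."""
    counts = Counter(frozenset(tags) for tags in stories)
    total = len(stories)
    return {
        tuple(sorted(combo)): n
        for combo, n in counts.items()
        if n >= min_count and n / total <= max_share
    }


print(find_fringe_themes(stories))
# {('modified it myself', 'new industry'): 2}
```

The one-off story is ignored and the mainstream is ignored; what’s left is two customers independently doing the same new thing, which is exactly the kind of signal worth following up.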

In the past, the mechanisms to collect and process these information streams did not exist. But they do now.

I hope you haven’t given up on the possibility of understanding what your customers will want in the near future, because it’s now possible.

I urge you to check out SenseMaker.

Experiment With Your People Systems

It’s pretty clear that innovation is the way to go. There’s endless creation of new technologies, new materials, and new processes so innovation can create new things to sell. And there are multiple toolsets and philosophies to get it done, but it’s difficult.

When doing new there’s no experience, no predictions, no certainty. But innovation is no dummy and has come up with a way to overcome the uncertainty. It builds knowledge of systems through testing – build it, test it, measure it, fix it. Not easy, but doable. And what makes it all possible is the repeatable response of things like steel, motors, pumps, software, hard drives. Push on them repeatably and their response is repeatable; stress them in a predictable way and their response is predictable; break them in a controlled way and the failure mode can be exercised.

Once there’s a coherent hypothesis that has the potential to make magic, innovation builds it in the lab, creates a measurement system to evaluate goodness, and tests it. After the good idea, innovation is about converting the idea into a hypothesis – a prediction of what will happen and why – and testing it early and often. And once things work every-day-all-day and make it into production, the factory measures them relentlessly to make sure the goodness is shipped with every unit, and the data is religiously plotted with control charts.

The next evolution of innovation will come from systematically improving people systems. There are some roadblocks but they can be overcome. In reality, they already have been overcome; it’s just that no one realizes it.

People systems are more difficult because their responses are not repeatable – where steel bends repeatably for a given stress, people do not. Give a last minute deliverable to someone in a good mood, and the work gets done; give that same deliverable to the same person on a bad day, and you get a lot of yelling. And because bad moods beget bad moods, people modify each other’s behavior. And when that non-repeatable, one-person-modifying-another response scales up to the team level, business unit, company, and supply chain, you have a complex adaptive system – a system that cannot be predicted. But just as innovation of airliners and automobiles uses testing to build knowledge out of uncertainty, testing can do the same for people systems.

To start, assumptions about how people systems would respond to new input must be hardened into formal hypotheses. And for the killer hypotheses that hang together, an experiment is defined; a small target population is identified; a measurement system is created; a baseline measurement is taken; and the experiment is run. Data is then collected, statistical analyses are made, and it’s clear if the hypothesis is validated or not. If validated, the solution is rolled out and the people system is improved. And in a control chart sense, the measurement system is transferred to the whole system and is left to run continuously to make sure the goodness doesn’t go away. If it’s invalidated, another hypothesis is generated and the process is repeated. (It’s actually better to test multiple hypotheses in parallel.)
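Keeping the measurement running “in a control chart sense” can be sketched as an individuals (XmR) chart, a standard statistical process control tool. The post doesn’t name a specific chart, so that choice is an assumption, and the weekly numbers below are invented: suppose the measurement is the share of collected stories that people tagged as positive. Limits come from the baseline, and later points outside them suggest the goodness may be going away.

```python
from statistics import mean

# Hypothetical weekly measurements (% of stories tagged positive) taken
# during the baseline period, before any change is rolled out.
baseline = [62, 65, 61, 64, 63, 66, 62, 64]


def xmr_limits(values):
    """Individuals-chart limits: center line +/- 2.66 x average moving range.
    2.66 is 3 / 1.128, where 1.128 is the d2 constant for ranges of size 2."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    spread = 2.66 * mean(moving_ranges)
    return center - spread, center, center + spread


def out_of_control(points, limits):
    """Return indexes of points that fall outside the control limits."""
    low, _, high = limits
    return [i for i, x in enumerate(points) if x < low or x > high]


limits = xmr_limits(baseline)
print(out_of_control([63, 64, 52, 65], limits))  # [2]
```

A full XmR chart also plots the moving ranges against their own limit; that half is left out to keep the sketch short.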

In the past, this approach was impossible because the measurement system did not exist. What was needed was a simple, mobile data acquisition system for “people data”, a method to automatically index the data, and a method to quickly process and display the results. The experimental methods were clear, but there was no response for the experiments. Now there is.

People systems are governed by what people think and feel, and the stories they tell are the surrogates for their thoughts and feelings. When an experiment is conducted on a people system, the stories are the “people data” that is collected, quantified, and analyzed. The stories are the response to the experiment.

It is now possible to run an experiment where a sample population uses a smart phone and an app to collect stories (text, voice, pictures), index them, and automatically send them to a server where some software groups the stories and displays them in a way to see patterns (groups of commonly indexed stories). All this is done in real time. And, by clicking on a data point, the program brings up the story associated with that data point.

Here’s how it works. The app is loaded, people tell their stories on their phone, and a baseline is established (a baseline story pattern). Inputs or constraints are changed for the target population and new stories are collected. If the patterns change in a desirable way (statistical analysis is possible), the new inputs and constraints are rolled out. If the stories change in an undesirable way, the target population reverts to standard conditions and the next hypothesis is tested.
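One way to decide whether a story pattern changed more than chance would explain is a chi-square test of homogeneity on how people tagged their stories before and after the change. This is a generic, swapped-in statistical test, not SenseMaker’s own analytics; the tag labels and counts are hypothetical, and the table holds the standard 95% chi-square critical values for 1 to 4 degrees of freedom.

```python
# Standard 95% critical values of the chi-square distribution, by degrees
# of freedom (only small tables are covered in this sketch).
CRITICAL_95 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_square_homogeneity(baseline, trial):
    """Chi-square statistic and degrees of freedom for two rows of counts.
    Both rows must contain at least one story."""
    categories = sorted(
        c for c in set(baseline) | set(trial)
        if baseline.get(c, 0) + trial.get(c, 0) > 0
    )
    rows = [[baseline.get(c, 0) for c in categories],
            [trial.get(c, 0) for c in categories]]
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(col) for col in zip(*rows)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(rows):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    return stat, len(categories) - 1


def pattern_changed(baseline, trial):
    """True if the pattern shifted beyond the 5% significance level."""
    stat, dof = chi_square_homogeneity(baseline, trial)
    return stat > CRITICAL_95[dof]


# Hypothetical counts of how people tagged their stories ("this week felt...").
baseline = {"frustrated": 40, "neutral": 35, "energized": 25}
trial = {"frustrated": 20, "neutral": 35, "energized": 45}
print(chi_square_homogeneity(baseline, trial))  # statistic about 12.38, 2 dof
print(pattern_changed(baseline, trial))  # True
```

The test only says the pattern moved, not whether the move is desirable; that judgment, and reading the stories behind the shift, stays with people. As a rule of thumb, the chi-square approximation wants expected counts of at least about 5 per cell.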

Unbiased, real-time, continuous information streams to make sense of your people systems are now possible. Real-time, direct connection to your employees and your customers is a reality, and the implications are staggering.

Thank you Dave Snowden.

The Complexity Conundrum

In school the problems you were given weren’t really problems at all. In school you opened the book to a specific page and there, right before you in paragraph form and numbered consecutively, was a neat row of “problems”. They were fully-defined, with known inputs, a formal equation that defined the system’s response, and one right answer. Nothing extra, nothing missing, nothing contradictory. Today’s problems are nothing like that.

Today’s problems don’t have a closed form solution; today’s problems don’t have a right answer. Three important factors come into play: companies and their systems are complex; the work, at some level, is always new; and people are always part of the equation.

It’s not that companies have a lot of moving parts (that makes them complicated); it’s that the parts can respond differently in different situations, can change over time (learn), and can interact and change each other’s responses (that’s complex). When you’re doing work you did last time, there’s a pretty good chance the system will perform like it did last time. But it’s a different story when the inputs are different, when the work is new.

When the work is new, there’s no precedent. The inputs are new and the response is newer. Perturb the system in a new way and you’re not sure how it will respond. New interactions between previously unreactive parts make for exciting times. The seemingly unconnected parts ping each other through the ether, stiffen or slacken, and do their thing in a whole new way. Repeatability is out the window, and causal predictability is out of the question. New inputs (new work) slather on layers of unknownness that must be handled differently.

Now for the real complexity culprit – people. Companies are nothing more than people systems in the shape of a company. And the work, well, that’s done by people. And people are well known to be complex. In a bad mood, we respond one way; confident and secure we respond in another. And people have memory. If something bad happened last time, next time we respond differently. And interactions among people are super complex – group think, seniority, trust, and social media.

Our problems swim with us in a hierarchical sea of complexity. That’s just how it is. Keep that in mind next time you put together your Gantt chart and next time you’re asked to guarantee the outcome of an innovation project.

Complexity is real, and there are real ways to handle it. But that’s for another time. Until then, I suggest you bone up on Dave Snowden’s work. When it comes to complexity, he’s the real deal.

Image credit – miguelb.

Mike Shipulski